Navigating the Regulatory Storm: The OBBBA AI Moratorium and Its Implications for Health Tech Innovation

The One Big Beautiful Bill Act (OBBBA) marks a potential watershed moment in American artificial intelligence regulation, proposing an unprecedented 10-year moratorium on the enforcement of state-level AI laws. For health technology entrepreneurs, this development presents both significant opportunities and serious challenges that could reshape the industry over the next decade. This essay examines the implications of OBBBA's AI provisions through the lens of healthcare innovation, analyzing how a single federal regulatory environment could accelerate market entry while also creating new compliance complexities. The analysis suggests that while the moratorium promises to reduce the current patchwork of state regulations that burdens emerging health tech companies, it also introduces regulatory uncertainty that could alter patient trust, clinical adoption patterns, and competitive dynamics in the healthcare AI sector. Health tech entrepreneurs must prepare for a shift that prioritizes federal oversight over state-level governance, which will require repositioning their compliance frameworks, product development cycles, and market entry strategies.

Table of Contents

1. Introduction: The Regulatory Crossroads

2. Understanding OBBBA: Deconstructing the Federal Framework

3. The Current State Regulatory Landscape: A Fragmented Foundation

4. Implications for Health Tech Innovation and Market Dynamics

5. Patient Safety and Trust: The Double-Edged Sword of Deregulation

6. Competitive Advantage in a Unified Regulatory Environment

7. Strategic Positioning for Health Tech Entrepreneurs

8. Constitutional and Legal Challenges: Navigating Uncertain Waters

9. Future Scenarios and Market Predictions

10. Conclusion: Charting a Course Through Regulatory Transformation

---

Introduction: The Regulatory Crossroads

The healthcare technology sector stands at a pivotal juncture as Congress contemplates legislation that could fundamentally reshape the regulatory environment governing artificial intelligence in healthcare. The One Big Beautiful Bill Act, passed by the House of Representatives in a narrow 215-214 vote, contains provisions that would impose a decade-long moratorium on the enforcement of state and local AI regulations, creating a regulatory vacuum that could either unleash unprecedented innovation or expose patients to unforeseen risks. For health tech entrepreneurs who have spent years navigating an increasingly complex maze of state-specific AI regulations, this proposed federal intervention represents both the promise of simplified compliance and the peril of regulatory uncertainty.

The healthcare AI market, valued at approximately forty-five billion dollars in 2024 and projected to exceed one hundred forty-eight billion dollars by 2029, operates within one of the most heavily regulated industries in the global economy. The intersection of healthcare regulation and emerging AI governance has created a uniquely challenging environment for entrepreneurs seeking to develop, deploy, and scale innovative health technologies. State governments have increasingly stepped into this regulatory void, creating a patchwork of requirements that vary dramatically across jurisdictions and impose significant compliance burdens on companies attempting to operate at scale.

The proposed moratorium emerges from a broader philosophical tension between innovation-first policies that prioritize market development and precautionary approaches that emphasize patient safety and regulatory oversight. This tension is particularly acute in healthcare, where the stakes of AI deployment extend far beyond commercial considerations to encompass fundamental questions of life, death, and human dignity. Health tech entrepreneurs operating in this space must therefore grapple not only with traditional business challenges but also with the profound ethical and regulatory implications of their technological innovations.

The timing of OBBBA's emergence is particularly significant given the current state of healthcare AI adoption. Healthcare providers are increasingly integrating AI tools across clinical, operational, and administrative functions, from diagnostic imaging and clinical decision support to revenue cycle management and patient engagement platforms. This rapid adoption has occurred largely in the absence of comprehensive federal AI governance, creating a regulatory landscape where state-level initiatives have attempted to fill the void through targeted legislation addressing specific AI applications in healthcare contexts.

For health tech entrepreneurs, the current regulatory environment presents both obstacles and opportunities. The complexity of navigating multiple state regulatory frameworks has created barriers to entry for smaller companies while potentially advantaging larger organizations with greater compliance resources. Simultaneously, the absence of clear federal standards has created uncertainty that may discourage investment and slow adoption of beneficial AI technologies in healthcare settings. The proposed moratorium seeks to address these challenges by creating a unified regulatory environment, but it also introduces new uncertainties about the adequacy of federal oversight and the potential consequences of preempting state-level protections.

Understanding OBBBA: Deconstructing the Federal Framework

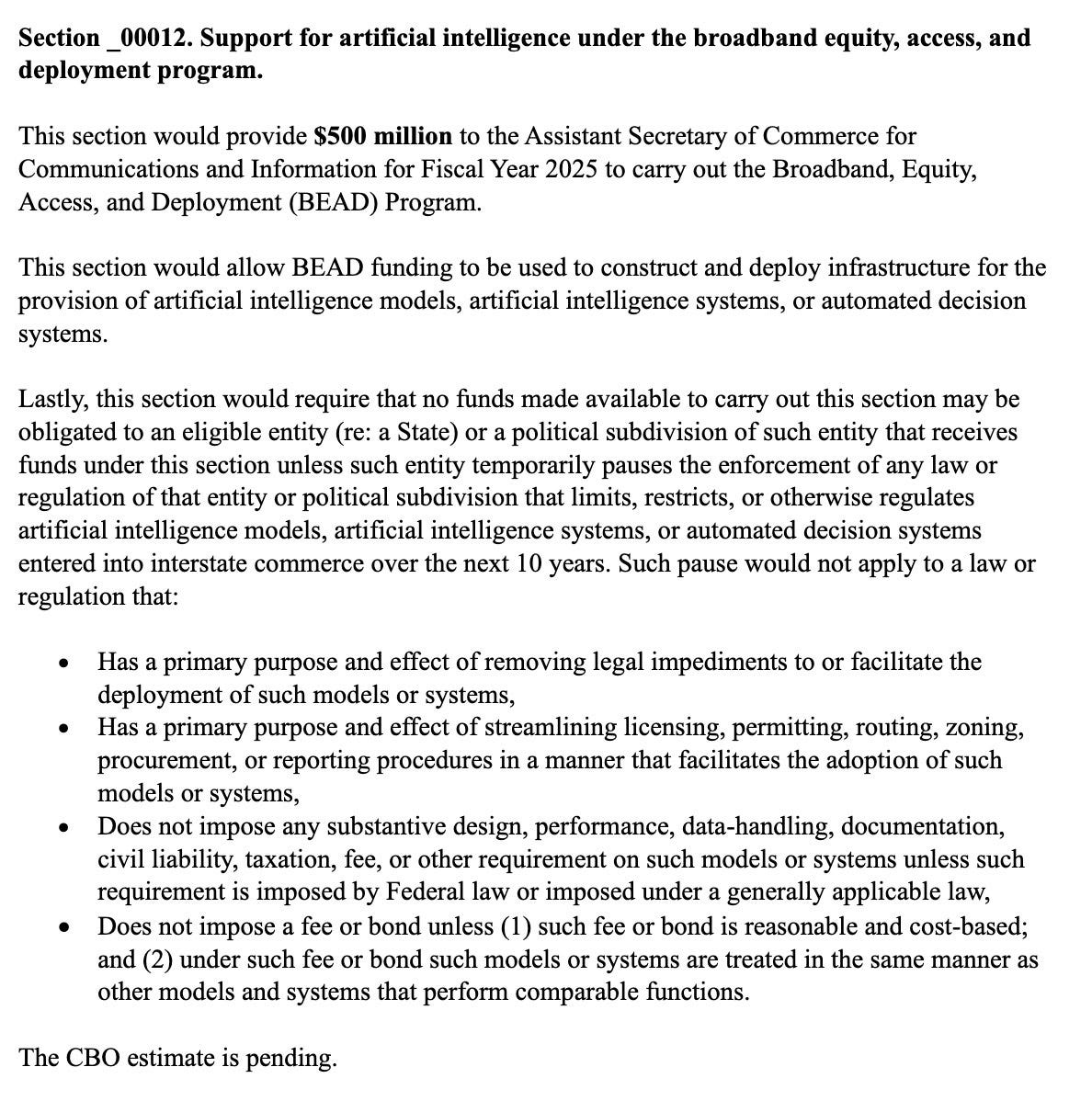

The One Big Beautiful Bill Act's artificial intelligence provisions represent one of the most significant federal interventions in AI governance since the technology emerged as a transformative force in healthcare. Section 43201 of OBBBA would bar states and their political subdivisions from enforcing any law or regulation regulating AI models, AI systems, or automated decision systems for ten years, fundamentally altering the relationship between federal and state authority and creating a de facto federal monopoly on AI governance for the next decade. Understanding the specific mechanisms and exceptions within this provision is crucial for health tech entrepreneurs seeking to navigate the potential regulatory transformation.

The Act's definition of artificial intelligence reflects a deliberately broad approach that encompasses virtually all forms of machine learning and automated decision-making systems currently deployed in healthcare settings. By defining AI as "a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments," OBBBA captures everything from simple clinical decision support tools to sophisticated large language models used for patient communication and care coordination. This expansive definition ensures that the moratorium's effects will be felt across the entire spectrum of healthcare AI applications, from established technologies like medical imaging analysis to emerging applications in personalized medicine and predictive analytics.

The inclusion of "automated decision systems" within the Act's scope further broadens its impact by encompassing "any computational process derived from machine learning, statistical modeling, data analytics, or AI that issues a simplified output to materially influence or replace human decision making." This definition effectively covers the vast majority of health tech products currently in development or deployment, including risk stratification algorithms, treatment recommendation engines, and population health management platforms. For health tech entrepreneurs, this comprehensive scope means that state rules governing virtually all AI-enabled products would fall within the moratorium's reach, leaving little room to avoid its effects through narrow technical distinctions.

However, the Act's three exceptions create significant complexity and potential loopholes that could substantially limit the moratorium's practical impact. The Primary Purpose and Effect Exception allows state regulations to remain enforceable if their primary purpose involves removing legal impediments, facilitating deployment, or consolidating administrative procedures related to AI adoption. This exception creates a pathway for states to maintain regulatory authority by framing their AI laws as innovation-enabling rather than restrictive, potentially undermining the moratorium's goal of creating regulatory uniformity.

The No Design, Performance, and Data-Handling Imposition Exception represents perhaps the most significant limitation on the moratorium's scope. It exempts state laws that impose no substantive design, performance, data-handling, or similar requirements on AI systems, except requirements imposed under federal law or by generally applicable laws that apply in the same manner to non-AI systems performing comparable functions. In effect, this exception preserves state authority over AI as long as it is exercised through generally applicable laws rather than AI-specific statutes. For health tech entrepreneurs, this means that states could potentially regulate AI systems through existing healthcare, privacy, consumer protection, or professional licensing frameworks, maintaining much of their current regulatory authority while technically complying with the moratorium.

The Reasonable and Cost-Based Fees Exception further narrows the moratorium's impact by allowing states to impose fees and bonds on AI systems as long as they are reasonable, cost-based, and treat AI systems the same way as other systems that perform comparable functions. This exception acknowledges the legitimate fiscal interests of state governments while attempting to prevent discriminatory fee structures that could burden AI innovation. However, the subjective nature of "reasonable and cost-based" determinations creates potential for ongoing disputes and regulatory uncertainty that could undermine the moratorium's goal of providing clarity to health tech entrepreneurs.

These exceptions collectively suggest that the moratorium's practical impact may be significantly more limited than its broad language initially suggests. Rather than creating a complete federal monopoly on AI regulation, OBBBA may simply require states to exercise their regulatory authority through different legal mechanisms while maintaining much of their substantive control over AI deployment in healthcare settings. This dynamic creates a complex regulatory environment where health tech entrepreneurs must continue to navigate state-level requirements while also preparing for potential federal governance frameworks that may emerge during the moratorium period.

The Act's enforcement mechanisms and penalties remain largely undefined, creating additional uncertainty about how the moratorium would be implemented and enforced in practice. Without clear federal enforcement authority or penalties for state non-compliance, the moratorium's effectiveness may depend largely on voluntary state cooperation and federal political pressure rather than legal compulsion. This enforcement uncertainty compounds the challenges facing health tech entrepreneurs who must make strategic decisions about product development, market entry, and compliance investments without clear guidance about the regulatory environment they will face.

The Current State Regulatory Landscape: A Fragmented Foundation

The existing patchwork of state AI regulations in healthcare represents one of the most complex and fragmented regulatory environments in modern American commerce. For health tech entrepreneurs, this fragmentation has created a landscape where successful multi-state operations require navigation of dozens of different regulatory frameworks, each with unique requirements, enforcement mechanisms, and compliance timelines. Understanding this current environment is essential for appreciating both the potential benefits and risks associated with OBBBA's proposed federal intervention.

California has emerged as the most aggressive state regulator of healthcare AI, with multiple pieces of legislation targeting different aspects of AI deployment in clinical settings. Assembly Bill 3030 represents a particularly significant intervention in the doctor-patient relationship by requiring disclosure when generative AI is used to communicate clinical information to patients. This requirement reflects growing concerns about patient autonomy and informed consent in an era of increasing AI integration, but it also creates significant compliance burdens for health tech companies developing patient-facing AI applications. The law's requirement that patients be told how to reach a human provider further reflects skepticism about AI's ability to fully replace human clinical judgment, creating potential barriers to AI adoption in telehealth and remote monitoring applications.

California's Senate Bill 1120 takes a different approach by focusing specifically on health insurance applications of AI, requiring that decisions to deny, delay, or modify care based on medical necessity be made by a licensed physician or qualified health care professional rather than by an algorithm alone. This legislation reflects broader concerns about algorithmic bias and discrimination in healthcare financing, but it also creates significant technical challenges for health tech companies developing AI-powered utilization management and claims processing systems. How much clinician review is needed to satisfy the statute remains poorly defined, creating uncertainty for vendors and potentially discouraging innovation in automated claims processing and prior authorization systems.

The Colorado Artificial Intelligence Act is arguably the most comprehensive state-level approach to AI regulation, establishing a framework that regulates both developers and deployers of AI systems with particular attention to "high-risk" applications. The Act's risk-based approach reflects a sophisticated understanding of AI's varied applications and potential harms, but it also creates complex compliance requirements that may be particularly burdensome for smaller health tech companies. The law's focus on algorithmic accountability and the prevention of algorithmic discrimination aligns with emerging best practices in responsible AI development, but the specific technical requirements and assessment methodologies remain subject to ongoing regulatory interpretation.

Utah's Artificial Intelligence Policy Act takes a disclosure-focused approach similar to California's AB 3030 but extends the requirement to all regulated occupations, including healthcare professionals. This broad application creates significant compliance challenges for health tech companies whose products are used across multiple professional contexts, requiring different disclosure approaches for different user categories. The law's requirement that the disclosure be made prominently at the start of an interaction creates particular challenges for AI applications that involve ongoing or iterative interactions with patients, potentially disrupting the natural flow of clinical communication.

Massachusetts Bill S.46 represents an emerging trend toward comprehensive AI disclosure requirements in healthcare settings, proposing to require healthcare providers to disclose AI use in any decisions affecting patient care. This broad requirement would capture virtually all clinical decision support applications, from simple risk calculators to sophisticated diagnostic AI systems. The legislation reflects growing recognition that patients have legitimate interests in understanding how AI influences their care, but it also creates potential barriers to AI adoption by making disclosure requirements so extensive that they may discourage clinical integration.

These state-level initiatives reflect different philosophical approaches to AI governance, ranging from California's precautionary principle to Utah's transparency-focused framework. However, they share common themes that reveal underlying concerns about AI deployment in healthcare settings. Patient autonomy and informed consent emerge as central themes across multiple jurisdictions, reflecting recognition that healthcare AI applications raise unique concerns about patient agency and decision-making authority. Algorithmic accountability and bias prevention represent another common focus, acknowledging that AI systems may perpetuate or amplify existing healthcare disparities if not properly designed and monitored.

The enforcement mechanisms for these state regulations vary significantly, creating additional complexity for health tech entrepreneurs. Some states rely primarily on professional licensing board enforcement, while others establish dedicated AI oversight authorities or expand existing consumer protection agencies' jurisdictions. These different enforcement approaches create varying levels of legal risk and compliance uncertainty, making it difficult for companies to develop consistent compliance strategies across multiple jurisdictions.

The fragmented nature of state AI regulation has created particular challenges for health tech companies seeking to scale rapidly across multiple markets. The need to customize products and compliance approaches for different state requirements increases development costs and slows time-to-market, potentially disadvantaging innovative startups relative to larger companies with greater regulatory resources. This fragmentation may also discourage venture capital investment in health tech startups by increasing regulatory risk and reducing the predictability of compliance costs.

Implications for Health Tech Innovation and Market Dynamics

The proposed federal moratorium on state AI legislation would fundamentally alter the competitive landscape for health tech innovation, creating new opportunities for market expansion while potentially exposing companies to different forms of regulatory risk. For entrepreneurs operating in this space, understanding these shifted dynamics is crucial for strategic planning and competitive positioning over the next decade.

The elimination of state-by-state compliance requirements is the most immediate and tangible benefit for health tech companies under the proposed moratorium. Currently, companies seeking to operate across multiple states must invest significant resources in legal analysis, product customization, and ongoing compliance monitoring to ensure adherence to varying state requirements. A federal moratorium could sharply reduce these costs, potentially cutting regulatory compliance expenses by sixty to eighty percent for companies operating in multiple jurisdictions. These savings could be reinvested in research and development, accelerating innovation cycles and enabling smaller companies to compete more effectively with larger organizations that have traditionally benefited from greater regulatory resources.

The standardization of regulatory requirements across states would also accelerate product development cycles by eliminating the need to design different product variants for different jurisdictions. Health tech companies could develop single product configurations that meet federal requirements rather than maintaining multiple versions customized for different state regulatory frameworks. This standardization effect would be particularly beneficial for companies developing AI-powered medical devices, clinical decision support systems, and population health management platforms that currently require significant customization to meet varying state disclosure, oversight, and documentation requirements.

Market entry barriers would be substantially reduced under a federal moratorium, potentially democratizing access to healthcare AI markets for smaller companies and international competitors. Currently, the complexity and cost of navigating multiple state regulatory frameworks create significant barriers to entry that favor established companies with existing compliance infrastructure. A unified federal framework would level the playing field by reducing the regulatory expertise and resources required for market entry, potentially increasing competition and innovation in healthcare AI applications.

However, the moratorium could also create new forms of competitive disadvantage for companies that have invested heavily in state-level compliance capabilities. Organizations that have developed sophisticated compliance frameworks and regulatory expertise around existing state requirements may find these investments devalued under a federal system. This dynamic could particularly affect larger health tech companies and established healthcare organizations that have built competitive advantages around their ability to navigate complex state regulatory environments.

The shift from state to federal regulatory authority could also alter innovation incentives in unexpected ways. State-level regulation has historically served as a testing ground for new regulatory approaches, allowing different jurisdictions to experiment with various governance frameworks and providing valuable learning opportunities for both regulators and industry participants. A federal moratorium would largely halt this experimentation, potentially slowing the development of effective AI governance approaches and reducing the overall sophistication of regulatory frameworks over time.

The moratorium's impact on venture capital and private equity investment in health tech represents another critical consideration for entrepreneurs. The current fragmented regulatory environment has created uncertainty that may discourage some investors, particularly those focused on early-stage companies that lack the resources to navigate complex compliance requirements. A federal moratorium could reduce this uncertainty and attract additional investment capital to the sector by providing greater predictability about regulatory requirements and compliance costs.

However, the moratorium could also create new forms of investment risk by concentrating regulatory authority at the federal level. Federal regulatory changes could have more dramatic and immediate impacts on entire industries under a unified system, potentially increasing the systemic risk associated with health tech investments. Investors may need to develop different risk assessment frameworks and due diligence approaches to account for this shifted regulatory dynamic.

The international competitiveness implications of the moratorium are particularly significant given the global nature of healthcare AI development. The European Union's comprehensive AI Act and similar initiatives in other jurisdictions have created regulatory frameworks that may be more restrictive than current U.S. state-level requirements. A federal moratorium that reduces regulatory requirements could provide U.S. health tech companies with competitive advantages in global markets by enabling faster development cycles and lower compliance costs. However, it could also create challenges for companies seeking to operate in international markets that maintain more stringent AI governance frameworks.

The moratorium's impact on clinical adoption of AI technologies represents perhaps the most important consideration for health tech entrepreneurs. Healthcare providers have historically been conservative adopters of new technologies, particularly those that affect clinical decision-making or patient safety. The regulatory uncertainty created by a federal moratorium could either accelerate adoption by reducing compliance complexity or slow adoption by creating concerns about adequate oversight and patient protection.

Patient Safety and Trust: The Double-Edged Sword of Deregulation

The intersection of AI regulation and patient safety represents one of the most critical considerations in evaluating OBBBA's proposed moratorium. For health tech entrepreneurs, understanding how regulatory changes might affect patient trust and clinical adoption is essential for predicting market dynamics and developing appropriate product strategies. The relationship between regulation and patient safety in healthcare AI applications is complex and multifaceted, involving technical, ethical, and psychological dimensions that extend far beyond traditional regulatory compliance considerations.

Patient trust in healthcare AI systems has been built partly on the foundation of regulatory oversight that provides assurance about safety, efficacy, and appropriate use. State-level AI regulations, despite their fragmented nature, have served important signaling functions by demonstrating governmental commitment to protecting patients from potential AI-related harms. Requirements for disclosure, human oversight, and algorithmic accountability have helped establish public confidence that AI deployment in healthcare settings occurs within appropriate guardrails designed to protect patient interests.

The proposed federal moratorium could undermine this trust-building function by eliminating many existing protective measures without providing clear federal alternatives. Patients who have become accustomed to disclosure requirements when AI influences their care may interpret the elimination of these requirements as a reduction in protection rather than a simplification of regulatory frameworks. This perception could create resistance to AI-enabled healthcare services and slow adoption of beneficial technologies, ultimately harming both patients and health tech companies.

The trust implications are particularly significant for vulnerable patient populations who may already harbor concerns about healthcare equity and algorithmic bias. Communities that have experienced historical healthcare disparities may view the elimination of state-level AI protections as evidence that their interests are being subordinated to commercial considerations. Health tech entrepreneurs developing AI applications for underserved populations must therefore consider how regulatory changes might affect community acceptance and clinical adoption in these critical market segments.

However, the current fragmented regulatory environment may also undermine patient safety by creating inconsistent protection levels across different jurisdictions. Patients receiving care in states with minimal AI regulation may be exposed to different levels of risk than those in states with comprehensive oversight requirements. A federal moratorium could potentially improve patient safety by creating opportunities for comprehensive federal regulation that provides consistent protection across all jurisdictions, assuming that appropriate federal frameworks are developed and implemented during the moratorium period.

The elimination of state disclosure requirements could have particularly complex effects on patient autonomy and informed consent. While excessive disclosure requirements may burden clinical workflows and potentially overwhelm patients with technical information, the complete elimination of disclosure obligations could deny patients information they need to make informed decisions about their care. Health tech entrepreneurs must consider how to maintain appropriate transparency and patient communication even in the absence of specific regulatory requirements, both for ethical reasons and to maintain patient trust and clinical adoption.

The moratorium's impact on clinical liability and malpractice considerations represents another crucial patient safety dimension. Healthcare providers using AI systems currently operate within legal frameworks that include both regulatory compliance requirements and professional liability standards. The elimination of state regulatory requirements could create uncertainty about professional liability standards and potentially expose both providers and patients to increased legal risk. Health tech companies may need to provide additional support to clinical users to help them navigate these uncertain liability landscapes.

The role of professional medical organizations and clinical specialty societies becomes particularly important in a reduced regulatory environment. These organizations may need to step into regulatory voids by developing professional standards, best practice guidelines, and certification requirements that help maintain patient safety even in the absence of formal governmental oversight. Health tech entrepreneurs should anticipate increased importance of professional organization endorsements and compliance with professional standards as alternatives to regulatory compliance in building market credibility and clinical adoption.

The psychological dimensions of patient trust in AI systems extend beyond regulatory considerations to encompass broader concerns about human agency, technological control, and the growing automation of medicine. Patients may worry that reduced regulatory oversight signals a shift toward less humanistic healthcare delivery models that prioritize efficiency over empathy and technological solutions over human judgment. Health tech entrepreneurs must address these concerns through product design, marketing approaches, and clinical support strategies that present AI as a complement to human healthcare providers rather than a replacement for them.

The international implications of reduced U.S. AI regulation for patient safety deserve particular attention given the global nature of healthcare AI development and deployment. If the United States adopts significantly more permissive AI governance frameworks than other developed nations, American patients might receive less protection than their international counterparts. This disparity could create competitive disadvantages for U.S. health tech companies seeking to operate in international markets with more stringent requirements while potentially exposing American patients to technologies that would not be permitted in other jurisdictions.

Quality assurance and post-market surveillance represent critical patient safety considerations that may be affected by the proposed moratorium. State regulations often include requirements for ongoing monitoring, adverse event reporting, and quality improvement processes that help identify and address AI-related safety issues after deployment. The elimination of these requirements could reduce the healthcare system's ability to detect and respond to emerging safety concerns, potentially exposing patients to avoidable risks.

Competitive Advantage in a Unified Regulatory Environment

The transition from a fragmented state-based regulatory system to a unified federal framework would fundamentally alter competitive dynamics in the healthcare AI sector, creating new opportunities for market differentiation while potentially commoditizing regulatory compliance as a competitive advantage. For health tech entrepreneurs, understanding these shifted competitive dynamics is crucial for strategic positioning and long-term business planning in an evolving regulatory landscape.

Under the current fragmented system, regulatory expertise and compliance capabilities have become significant sources of competitive advantage, particularly for companies seeking to operate across multiple states. Organizations that have invested in sophisticated legal teams, compliance management systems, and regulatory monitoring capabilities enjoy substantial advantages over competitors lacking these resources. The proposed federal moratorium would largely eliminate these advantages by standardizing regulatory requirements across jurisdictions, potentially democratizing access to healthcare AI markets and intensifying competition among technology providers.

The elimination of state-by-state compliance requirements would particularly benefit smaller health tech companies and startups that currently struggle to compete with larger organizations possessing greater regulatory resources. These smaller companies could redirect compliance investments toward research and development, product innovation, and market expansion activities that more directly contribute to competitive positioning. This dynamic could accelerate innovation by enabling more companies to compete effectively in healthcare AI markets, potentially leading to faster technological advancement and greater diversity of available solutions.

However, the regulatory standardization effect could also create new forms of competitive advantage based on different capabilities and market positioning strategies. Companies that excel at federal regulatory navigation, clinical validation, and evidence generation might gain advantages over competitors that have historically relied on state-level compliance expertise. The shift toward federal oversight could also increase the importance of relationships with federal agencies, clinical research organizations, and national healthcare networks relative to state-level stakeholder relationships.

The moratorium's impact on first-mover advantages is a particularly important consideration for health tech entrepreneurs developing novel AI applications. Under the current system, companies that successfully navigate complex state regulatory requirements for innovative technologies often gain a meaningful head start while competitors work to build comparable compliance capabilities. A simplified federal framework could shrink that head start by making it easier for competitors to enter markets soon after an innovator launches.

International competitiveness implications of the moratorium extend beyond simple regulatory burden reduction to encompass fundamental questions about innovation incentives and market positioning strategies. A more permissive U.S. regulatory environment could attract international health tech companies to develop and test AI technologies in American markets, potentially increasing competition for domestic companies. Conversely, reduced regulatory requirements could enable U.S. companies to develop technologies more rapidly and at lower cost than international competitors operating under more restrictive frameworks.

The role of clinical validation and evidence generation could become increasingly important as sources of competitive advantage in a less regulated environment. While regulatory compliance has historically served as a quality signal for healthcare AI products, the elimination of diverse state requirements could shift market emphasis toward clinical evidence, peer-reviewed research, and real-world outcomes data as primary differentiators among competing solutions. Companies that excel at generating and communicating clinical evidence might gain significant advantages over competitors that have historically relied primarily on regulatory compliance for market credibility.

Partnership strategies and ecosystem development could also become more important competitive differentiators in a unified regulatory environment. Companies that build strong relationships with healthcare providers, payer organizations, and clinical research institutions might enjoy sustainable advantages over competitors that focus primarily on technology development. The elimination of state-specific compliance requirements could enable companies to invest more resources in building these strategic partnerships and developing comprehensive ecosystem approaches to market penetration.

The moratorium could also affect competitive dynamics around data access and utilization, which represent increasingly important sources of advantage in healthcare AI applications. Companies that have historically invested heavily in state-specific compliance capabilities might redirect these resources toward data acquisition, curation, and utilization strategies that provide more sustainable competitive advantages. Access to diverse, high-quality healthcare datasets could become an even more critical differentiator as regulatory barriers to entry diminish.

Intellectual property strategies might also evolve in response to changing competitive dynamics under a federal moratorium. Companies that have historically relied on regulatory complexity as a form of competitive moat might need to develop stronger patent portfolios, trade secret protection strategies, and technology differentiation approaches to maintain market advantages. The democratization of regulatory access could increase the importance of traditional intellectual property protections as sources of sustainable competitive advantage.

The implications for venture capital and private equity investment strategies in healthcare AI represent another crucial competitive consideration. Investors might shift focus from companies with strong regulatory compliance capabilities toward those with superior technology platforms, clinical evidence generation capabilities, and market penetration strategies. This investment reallocation could affect which types of health tech companies receive funding and how they position themselves for growth and market expansion.

Strategic Positioning for Health Tech Entrepreneurs

The potential implementation of OBBBA's AI moratorium requires health tech entrepreneurs to fundamentally reconsider their strategic positioning and operational approaches across multiple dimensions of their businesses. The transition from a state-fragmented to a federally unified regulatory environment presents both unprecedented opportunities and significant risks that demand careful strategic planning and tactical adaptation.

Product development strategies must evolve to account for the elimination of state-specific customization requirements while preparing for potential federal standards that may emerge during the moratorium period. Health tech companies should consider developing modular product architectures that can be quickly adapted to accommodate new federal requirements without requiring complete product redesigns. This approach provides flexibility to respond to regulatory changes while avoiding over-investment in compliance features that may become obsolete under new federal frameworks.

The timing of product launches and market entry strategies becomes particularly critical in a transitional regulatory environment. Companies with products currently held back by complex state compliance requirements might accelerate their go-to-market timelines to capitalize on reduced regulatory barriers. However, entrepreneurs must balance speed-to-market advantages against the risks of launching products before federal standards are clearly established, which could require costly retrofitting or repositioning if federal requirements differ from current state standards.

Clinical validation and evidence generation strategies should be strengthened regardless of regulatory requirements, as these capabilities will likely become more important for market differentiation in a less regulated environment. Health tech entrepreneurs should invest in robust clinical trial capabilities, real-world evidence generation systems, and peer-reviewed research programs that demonstrate the safety and efficacy of their technologies independent of regulatory compliance. These evidence-based approaches not only support market acceptance but also provide valuable preparation for potential federal oversight requirements.

Partnership and alliance strategies require reconsideration in light of changed competitive dynamics and market access patterns. Companies should evaluate whether existing state-focused partnerships remain optimal under a federal system and consider developing relationships with national healthcare networks, federal agencies, and multi-state provider organizations. Strategic alliances with established healthcare organizations might become more valuable as regulatory barriers to entry diminish and competition intensifies.

Financial planning and capital allocation strategies must account for both the potential cost savings from reduced regulatory compliance and the need for continued investment in areas that support competitive positioning. Companies should develop detailed financial models that quantify the expected savings from eliminated state compliance requirements and identify optimal reallocation strategies for these resources. Potential areas for increased investment include clinical validation, technology development, market expansion, and federal regulatory preparation.
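To make the savings-and-reallocation exercise concrete, the following Python sketch models it under loudly stated assumptions. The annual state-compliance budget, the reduction fractions (taken from the sixty to eighty percent range this essay cites for multi-state operators), and the allocation weights are all hypothetical placeholders, as are the names `ComplianceScenario`, `freed_budget`, and `allocate`; none of them comes from OBBBA or from any real company's figures.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ComplianceScenario:
    """Hypothetical inputs for one savings scenario (illustrative only)."""
    name: str
    state_compliance_cost: float  # assumed annual spend on state-specific compliance, in dollars
    reduction: float              # assumed fraction of that spend eliminated, between 0 and 1


def freed_budget(scenario: ComplianceScenario) -> float:
    """Dollars released if the assumed fraction of state compliance spend disappears."""
    if not 0.0 <= scenario.reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return scenario.state_compliance_cost * scenario.reduction


def allocate(amount: float, weights: dict[str, int]) -> dict[str, float]:
    """Split the freed budget across investment areas in proportion to integer weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {area: amount * w / total for area, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical $2M annual state-compliance spend, evaluated at both ends of the cited range.
    scenarios = [
        ComplianceScenario("low end", 2_000_000, 0.60),
        ComplianceScenario("high end", 2_000_000, 0.80),
    ]
    # Hypothetical priorities across the four investment areas named in the text.
    weights = {
        "clinical_validation": 40,
        "technology_development": 30,
        "market_expansion": 20,
        "federal_regulatory_preparation": 10,
    }
    for s in scenarios:
        freed = freed_budget(s)
        print(f"{s.name}: ${freed:,.0f} freed")
        for area, dollars in allocate(freed, weights).items():
            print(f"  {area}: ${dollars:,.0f}")
```

With these placeholder inputs, the high-end case frees about $1.6 million, of which $640,000 goes to clinical validation. Integer weights keep the split easy to audit; a real model would replace every constant with the company's own cost data.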

Risk management strategies need comprehensive updating to address the new risk profile created by a federal moratorium. While state regulatory compliance risks would be largely eliminated, new risks emerge around federal regulatory uncertainty, competitive pressure from increased market access, and potential patient safety concerns in a less regulated environment. Companies should develop risk assessment frameworks that account for these shifted risk profiles and establish appropriate mitigation strategies.

Intellectual property strategies become increasingly important as traditional regulatory barriers to competition diminish. Health tech entrepreneurs should strengthen their patent portfolios, trade secret protection programs, and technology differentiation approaches to maintain competitive advantages that may no longer be protected by regulatory complexity. This includes both defensive patent strategies to protect existing innovations and offensive approaches to establish market barriers against new competitors.

Talent acquisition and organizational development strategies should reflect the changing skill sets required for success in a federally regulated environment. Companies may need fewer state regulatory compliance specialists and more federal regulatory experts, clinical validation professionals, and competitive intelligence analysts. Broader market access could also increase demand for business development, partnership management, and market expansion expertise.

Marketing and positioning strategies must address potential changes in customer decision-making processes and evaluation criteria under a federal system. Healthcare providers and payer organizations might shift their vendor evaluation approaches as regulatory compliance becomes less differentiating among competing solutions. Companies should develop marketing strategies that emphasize clinical outcomes, operational efficiency, and technological superiority rather than regulatory compliance capabilities.

International expansion strategies could become more attractive under a simplified domestic regulatory framework that frees resources for international market development. Health tech entrepreneurs should evaluate opportunities to leverage reduced domestic compliance costs to support expansion into international markets, while considering how different global regulatory approaches might affect their competitive positioning.

Scenario planning becomes particularly important given the uncertainty surrounding the moratorium's ultimate implementation and the potential for significant federal regulatory developments during the moratorium period. Companies should develop multiple strategic scenarios based on different possible outcomes, including successful moratorium implementation with minimal federal replacement regulation, moratorium implementation followed by comprehensive federal standards, and moratorium failure with continued state-level regulation.
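One common way to make this kind of scenario planning quantitative is a probability-weighted comparison paired with a worst-case check. The Python sketch below applies that idea to the three outcomes named above; the probabilities and projected compliance costs are hypothetical placeholders a planning team would set for itself, and the `Scenario` type and helper functions are illustrative names, not part of any established tool.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability: float             # hypothetical planning weight, not a forecast
    annual_compliance_cost: float  # hypothetical projected cost under this outcome, in dollars


def expected_cost(scenarios: list[Scenario]) -> float:
    """Probability-weighted compliance cost across mutually exclusive scenarios."""
    total_p = sum(s.probability for s in scenarios)
    if not math.isclose(total_p, 1.0, abs_tol=1e-9):
        raise ValueError("scenario probabilities must sum to 1")
    return sum(s.probability * s.annual_compliance_cost for s in scenarios)


def worst_case(scenarios: list[Scenario]) -> Scenario:
    """Scenario with the highest projected cost, used to stress-test a plan."""
    return max(scenarios, key=lambda s: s.annual_compliance_cost)


if __name__ == "__main__":
    # The three outcomes named in the text, with placeholder numbers.
    outlook = [
        Scenario("moratorium, minimal federal replacement", 0.3, 500_000),
        Scenario("moratorium, then comprehensive federal standards", 0.3, 900_000),
        Scenario("moratorium fails, state regulation continues", 0.4, 2_000_000),
    ]
    print(f"expected annual cost: ${expected_cost(outlook):,.0f}")
    print(f"worst case: {worst_case(outlook).name}")
```

The expected value alone can understate exposure to the costliest outcome, which is why the sketch also reports the worst case: a plan that is affordable only in the expected case may not be robust if the moratorium fails.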

Long-term strategic planning must account for the temporary nature of the proposed ten-year moratorium and the likelihood that some form of federal AI regulation will eventually emerge. Health tech entrepreneurs should position their companies to influence the development of future federal standards while building capabilities that will remain valuable regardless of the ultimate regulatory framework. This includes investing in ethical AI development practices, algorithmic accountability systems, and patient safety monitoring capabilities that represent best practices independent of specific regulatory requirements.

Constitutional and Legal Challenges: Navigating Uncertain Waters

The proposed federal moratorium on state AI legislation faces significant constitutional and legal obstacles that could affect its implementation, enforcement, and ultimate viability. For health tech entrepreneurs, understanding these legal challenges is crucial for assessing the probability of successful implementation and preparing for various potential outcomes that could affect strategic planning and business operations over the next decade.

The Tenth Amendment to the United States Constitution reserves to the states, or to the people, the powers not delegated to the federal government, and states have long relied on these reserved police powers to regulate health and safety. The proposed moratorium directly challenges this constitutional framework by preventing states from exercising their traditional authority to protect public health and safety through AI regulation. The fact that a bipartisan group of forty state attorneys general has already raised constitutional concerns suggests that legal challenges to the moratorium are not only likely but may enjoy broad political support across traditional partisan divides.

The constitutional doctrine of federal preemption, grounded in the Supremacy Clause, provides the legal framework through which OBBBA's moratorium would operate, but applying preemption principles to AI regulation raises novel questions that federal courts have not definitively resolved. Preemption can be express, as the moratorium's text would be, or implied: courts ask whether federal and state laws conflict so that compliance with both is impossible, or whether federal regulation is so comprehensive that it demonstrates congressional intent to occupy the entire field. The proposed AI moratorium is an unusual form of preemption because it bars state regulation without supplying a comprehensive federal alternative. That structure invites the anti-commandeering objection the Supreme Court accepted in Murphy v. NCAA (2018), where it held that a valid preemption provision must regulate private actors rather than simply dictate what states may do.

The Commerce Clause gives Congress broad authority to regulate interstate commerce, which could support federal preemption of state AI regulations that affect interstate business operations. However, healthcare regulation has traditionally been treated as a state responsibility, and courts generally apply a presumption against preemption in fields of traditional state police power, making them reluctant to find that federal commerce power completely displaces state health and safety authority. The moratorium's broad application to healthcare AI could therefore face particular constitutional scrutiny.

The legal challenges to OBBBA's moratorium are likely to focus on several specific constitutional and statutory arguments that could affect both the timing and scope of its implementation. State attorneys general may argue that the moratorium exceeds federal constitutional authority by improperly interfering with state police powers, particularly in areas like healthcare regulation where states have historically maintained primary responsibility. These arguments could lead to preliminary injunctions that prevent implementation of the moratorium while legal challenges proceed through federal courts.

The procedural hurdles facing the moratorium's implementation extend beyond constitutional challenges to include practical questions about enforcement mechanisms and federal agency authority. The Act does not clearly specify which federal agencies would be responsible for enforcing the moratorium or what penalties would apply to states that continue to enforce prohibited AI regulations. This enforcement uncertainty creates potential for non-compliance by states that oppose the moratorium, particularly those that have invested significantly in developing AI regulatory frameworks.

The political dynamics surrounding the moratorium's legal challenges could significantly affect its ultimate success and implementation timeline. The bipartisan opposition from state attorneys general suggests that legal challenges might receive support from both Democratic and Republican-controlled states, potentially creating unusual political coalitions that could affect both litigation strategies and congressional support for the moratorium. Health tech entrepreneurs should monitor these political developments carefully, as they could affect both the likelihood of successful legal challenges and the potential for congressional modifications to the moratorium's provisions.

The relationship between the proposed moratorium and existing federal AI regulatory initiatives presents additional legal complexity that could affect implementation and enforcement. Various federal agencies, including the Department of Health and Human Services and its Food and Drug Administration, as well as the Federal Trade Commission, have already begun developing AI governance frameworks within their existing authorities. The moratorium's interaction with these existing federal initiatives remains unclear and could create jurisdictional conflicts that complicate implementation.

International considerations could also affect the moratorium's effectiveness, given the global nature of healthcare AI development and deployment. International trade agreements and bilateral cooperation frameworks may matter less as legal limits on the moratorium itself than as practical ones: divergence from the approaches of major trading partners could complicate cross-border data flows, product approvals, and market access. Health tech entrepreneurs with international operations should consider how these external constraints might interact with the moratorium and with their own compliance strategies.

The precedential implications of successful legal challenges to the moratorium could have lasting effects on federal-state relationships in technology regulation more broadly. A successful constitutional challenge could establish judicial precedents that limit federal authority to preempt state technology regulations, potentially affecting future federal initiatives in areas like data privacy, cybersecurity, and emerging technology governance. Conversely, successful defense of the moratorium could strengthen federal preemption authority and encourage similar approaches in other technology sectors.

The timeline for resolution of legal challenges represents a crucial consideration for health tech entrepreneurs seeking to make strategic decisions based on the moratorium's potential implementation. Constitutional challenges to federal legislation typically require several years to resolve through the federal court system, during which time uncertainty about the moratorium's ultimate validity could persist. Companies may need to develop contingency plans that account for various potential outcomes and different implementation timelines.

The role of federal district courts in different jurisdictions could affect both the strategy and timeline of legal challenges to the moratorium. State attorneys general are likely to file challenges in federal district courts that they believe are most favorable to their constitutional arguments, potentially creating a complex landscape of conflicting lower court decisions that could take years to resolve through appellate review. The forum shopping opportunities available to state attorneys general could result in initial victories for moratorium opponents in courts known for skepticism of federal preemption, creating temporary injunctions that delay implementation while appeals proceed through higher courts.

The evidentiary requirements for constitutional challenges to the moratorium present both opportunities and risks for health tech entrepreneurs who may be called upon to provide testimony or documentation about the practical effects of state AI regulations. Companies that have invested heavily in state compliance capabilities might serve as important witnesses in demonstrating the real-world impacts of regulatory fragmentation, while those that have struggled with compliance costs could provide evidence supporting the moratorium's benefits for innovation and competition. The role of industry testimony in these legal proceedings could significantly influence both the outcome of constitutional challenges and the public perception of the moratorium's effects on healthcare innovation.

The potential for emergency stays and preliminary injunctions during constitutional litigation creates additional uncertainty for health tech entrepreneurs attempting to plan product development and market entry strategies. Courts could issue temporary restraining orders that prevent moratorium implementation while constitutional challenges proceed, creating extended periods of regulatory uncertainty that complicate business planning and investment decisions. Companies may need to develop contingency plans that account for stop-and-start implementation scenarios where the moratorium's legal status changes multiple times during the litigation process.

Future Scenarios and Market Predictions

The next decade of healthcare AI regulation will likely unfold through one of several distinct scenarios, each carrying different implications for health tech entrepreneurs and market development. Understanding these potential futures is essential for strategic planning and risk management in an industry where regulatory changes can fundamentally alter competitive dynamics and market opportunities.

The most optimistic scenario for health tech entrepreneurs involves successful implementation of the moratorium followed by the development of streamlined federal AI standards that provide clear guidance while minimizing compliance burdens. Under this scenario, eliminating state-level regulatory fragmentation could reduce compliance costs by sixty to eighty percent for multi-state operators, freeing significant resources for research and development investment. The healthcare AI market could experience accelerated growth as reduced regulatory barriers enable faster product development cycles and easier market entry for innovative companies.

This optimistic scenario would likely see the emergence of federal AI standards that focus on outcome-based regulation rather than prescriptive technical requirements, allowing companies flexibility in how they achieve safety and efficacy goals. Professional medical organizations and clinical specialty societies would play increasingly important roles in developing best practice guidelines and certification programs that complement federal oversight. The result could be a more dynamic and innovative healthcare AI ecosystem that maintains appropriate patient protections while enabling rapid technological advancement.

Outcome-based standards of this kind would give health tech companies flexibility to innovate while meeting clear safety and efficacy requirements, and they would favor companies with strong clinical validation capabilities and real-world evidence generation systems, rewarding organizations that excel at demonstrating clinical value. The healthcare AI market could see consolidation around companies that combine technological innovation with clinical evidence excellence, creating a more mature and professionally oriented industry structure.

A moderate scenario involves successful moratorium implementation but delayed or incomplete development of comprehensive federal standards, creating a regulatory vacuum that persists for several years. Under this scenario, market forces and professional standards would largely fill the regulatory void, with healthcare providers, payer organizations, and professional societies developing their own AI governance frameworks. This self-regulation approach could maintain innovation incentives while addressing patient safety concerns, but it might also create new forms of market fragmentation as different healthcare organizations adopt different standards.

The moderate scenario would likely see increased importance of industry consortiums, standards organizations, and professional certification programs as alternatives to formal regulatory oversight. Health tech entrepreneurs would need to navigate these voluntary frameworks while preparing for eventual federal regulation that could differ significantly from current state approaches. Market consolidation might accelerate as larger organizations with greater resources for self-regulation gain advantages over smaller companies struggling to meet voluntary standards.

Professional liability and malpractice considerations would become increasingly important under this moderate scenario, as healthcare providers seek protection through professional standards and industry best practices in the absence of clear regulatory requirements. Health tech companies might need to provide more extensive indemnification and professional liability support to clinical users, potentially increasing costs and affecting product pricing strategies. The role of professional medical organizations in establishing clinical practice guidelines for AI use would expand significantly, creating new gatekeepers for market acceptance that health tech entrepreneurs must engage effectively.

A pessimistic scenario for health tech entrepreneurs involves successful legal challenges that block implementation or narrow the moratorium's scope so much that it fails to achieve the intended regulatory simplification. Under this scenario, the current fragmented state regulatory environment would persist and potentially intensify as states respond to federal preemption attempts by developing more sophisticated and restrictive AI governance frameworks. The resulting regulatory complexity could significantly burden health tech innovation while failing to provide the patient protection benefits that justify regulatory intervention.

This pessimistic scenario might see the emergence of even more complex compliance requirements as states compete to demonstrate their commitment to patient protection in the face of federal preemption attempts. Interstate commerce in healthcare AI could become increasingly difficult as states develop incompatible regulatory approaches that effectively balkanize the national market. Innovation could slow significantly as compliance costs consume increasing portions of company resources and regulatory uncertainty discourages venture capital investment.

The competitive implications of this pessimistic scenario would particularly disadvantage smaller health tech companies that lack the resources to navigate increasingly complex state regulatory frameworks. Larger companies with dedicated regulatory teams might gain relative advantages, potentially leading to market consolidation that reduces innovation and limits entrepreneurial opportunities. The resulting regulatory environment could create barriers to entry that protect established players while discouraging new market entrants, ultimately slowing the pace of healthcare AI advancement.

An alternative scenario involves partial moratorium implementation that affects only certain types of AI applications or certain aspects of state regulation, creating a hybrid federal-state system that maintains complexity while providing some benefits of standardization. This outcome might result from congressional compromises designed to address constitutional concerns or from court decisions that uphold some aspects of the moratorium while striking down others. Health tech entrepreneurs would face continued complexity in navigating mixed regulatory frameworks while potentially benefiting from reduced requirements in certain application areas.

This hybrid scenario could create particularly complex compliance challenges as companies attempt to determine which regulatory frameworks apply to different aspects of their products and operations. The boundaries between federal and state authority could become contentious areas requiring ongoing legal interpretation and potentially creating liability exposure for companies that incorrectly assess their regulatory obligations. Market fragmentation might persist in areas where state authority is preserved while standardization occurs in federally preempted domains.

The international competitiveness implications of these different scenarios vary significantly and could affect the long-term viability of U.S. health tech companies in global markets. A successful moratorium that reduces regulatory burdens could provide competitive advantages for U.S. companies seeking to develop and deploy AI technologies more rapidly than international competitors operating under more restrictive frameworks. However, reduced regulatory standards could also create disadvantages in international markets that maintain stricter requirements and view U.S. products as inadequately tested or regulated.

European Union markets, governed by the comprehensive EU AI Act, might become increasingly difficult for U.S. health tech companies to enter if American regulatory standards diverge significantly from European requirements. Companies that develop products under a permissive U.S. regulatory framework might find themselves unable to meet European compliance requirements without significant product modifications and additional clinical validation. This dynamic could force health tech entrepreneurs to choose between optimizing for domestic or international markets, potentially limiting global growth opportunities.

The role of technological advancement in shaping these scenarios deserves particular attention, as rapid developments in AI capabilities could outpace regulatory frameworks regardless of whether state or federal approaches predominate. Breakthrough developments in areas like artificial general intelligence, quantum computing, or brain-computer interfaces could render current regulatory debates obsolete while creating entirely new governance challenges that neither state nor federal authorities are prepared to address.

The emergence of foundation models and large language models specifically trained for healthcare applications could accelerate this technological disruption by enabling capabilities that current regulatory frameworks were not designed to address. Health tech entrepreneurs working with these advanced technologies might find themselves operating in regulatory gray areas regardless of the moratorium's fate, requiring proactive engagement with regulators and professional organizations to help shape appropriate governance approaches.

Patient and provider acceptance of different regulatory scenarios represents another crucial variable that could affect market development independent of formal regulatory requirements. Healthcare providers who are comfortable with current state regulatory frameworks might resist AI technologies that no longer meet familiar standards, even if federal authorities approve their use. Conversely, providers frustrated by current compliance complexity might embrace federal standardization even if it involves some reduction in patient protection measures.

The trust implications of different regulatory scenarios could have lasting effects on healthcare AI adoption rates and market development. Patients who have come to expect certain disclosure and oversight requirements might view their elimination as evidence of reduced protection, potentially creating resistance to AI-enabled healthcare services. Health tech entrepreneurs must consider how to maintain patient trust and clinical acceptance even in scenarios where formal regulatory requirements are reduced.

The venture capital and private equity implications of these different scenarios could significantly affect the availability of funding for health tech innovation over the next decade. A successful moratorium that reduces regulatory uncertainty might attract increased investment capital to the sector, while continued fragmentation or legal uncertainty could discourage investment and slow innovation. International investment patterns might also shift as investors seek regulatory environments that provide optimal combinations of market access and regulatory predictability.

The geographic distribution of venture capital investment in healthcare AI could change significantly depending on which regulatory scenario emerges. States that maintain strong AI regulatory frameworks might see reduced investment activity if companies and investors prefer jurisdictions with minimal regulatory requirements. Conversely, states that position themselves as AI innovation hubs through supportive regulatory approaches might attract increased investment and talent, creating regional competitive advantages that persist beyond the moratorium period.

Conclusion: Charting a Course Through Regulatory Transformation

The One Big Beautiful Bill Act's proposed moratorium on state AI legislation represents a watershed moment for healthcare technology entrepreneurs. The narrow 215-214 House passage of OBBBA shows how contentious this regulatory shift is, while opposition from a bipartisan coalition of forty state attorneys general signals the constitutional and political obstacles that lie ahead.

For health tech entrepreneurs, the strategic imperative is clear: prepare for multiple scenarios while positioning companies to thrive regardless of the ultimate regulatory outcome. The potential elimination of state-by-state compliance requirements could reduce regulatory costs by sixty to eighty percent for multi-state operators, freeing substantial resources for innovation and market expansion. However, this regulatory simplification comes with the risk of reduced patient protections and potential erosion of public trust in healthcare AI applications.

The most successful health tech companies over the next decade will be those that recognize regulatory compliance as just one component of a comprehensive approach to responsible AI development and deployment. Clinical validation, evidence generation, ethical AI development practices, and patient safety monitoring will become increasingly important competitive differentiators as regulatory barriers to entry diminish. Companies that invest in these capabilities will be better positioned to maintain market credibility and clinical adoption regardless of the specific regulatory framework that ultimately emerges.

The constitutional and legal challenges facing the moratorium create significant uncertainty about implementation timelines and ultimate scope, requiring health tech entrepreneurs to develop adaptive strategies that can respond to various potential outcomes. Companies should prepare for scenarios ranging from complete moratorium success with minimal federal replacement regulation to continued state-level fragmentation with intensified complexity. This preparation should include financial modeling, product development strategies, and partnership approaches that remain viable across different regulatory environments.

The international competitiveness implications of the moratorium extend beyond domestic market considerations to encompass fundamental questions about the United States' role in global healthcare AI leadership. A more permissive regulatory environment could attract international investment and talent while enabling U.S. companies to develop technologies more rapidly than international competitors. However, reduced regulatory standards could also create disadvantages in international markets and potentially expose American patients to technologies that would not be permitted in other developed nations.

The patient safety and trust implications of regulatory change demand particular attention from health tech entrepreneurs who must balance commercial opportunities with ethical responsibilities. The healthcare sector's unique characteristics, including information asymmetries between providers and patients, life-and-death consequences of technological failures, and historical healthcare disparities, create special obligations for companies developing AI applications in clinical settings. Maintaining patient trust and clinical adoption will require continued commitment to transparency, safety, and ethical development practices even in the absence of specific regulatory requirements.

The evolving role of professional medical organizations, clinical specialty societies, and industry consortiums in AI governance presents both opportunities and challenges for health tech entrepreneurs. These organizations may become increasingly important sources of standards, best practices, and market credibility as formal regulatory oversight potentially diminishes. Companies that actively engage with these professional communities and contribute to the development of voluntary standards will be better positioned to influence emerging governance frameworks and maintain market acceptance.

Funding dynamics will shift as well. Reduced regulatory uncertainty might attract additional investment capital to the sector, but broader market access could also intensify competition for that capital among a larger pool of viable companies. Health tech entrepreneurs should therefore prepare for changed investment dynamics and develop value propositions that emphasize technological differentiation and clinical outcomes rather than regulatory compliance capabilities.

Looking ahead, the next decade will likely see continued evolution in healthcare AI governance regardless of the moratorium's ultimate fate. Technological advancement will continue to outpace regulatory development, creating new challenges and opportunities that require adaptive approaches from both entrepreneurs and regulators. The companies that succeed will be those that view regulatory change as an opportunity to strengthen their competitive positioning rather than simply reduce compliance costs.

The broader implications of this regulatory transformation extend beyond individual company strategies to encompass fundamental questions about the appropriate balance between innovation and protection in healthcare technology governance. The healthcare AI sector serves as a crucial testing ground for broader questions about artificial intelligence regulation that will affect virtually every aspect of human society over the coming decades. Health tech entrepreneurs have both the opportunity and responsibility to demonstrate that technological innovation and patient protection can be pursued simultaneously through thoughtful product development, ethical business practices, and meaningful engagement with stakeholders across the healthcare ecosystem.

Success in this transformed regulatory environment will require health tech entrepreneurs to embrace expanded definitions of corporate responsibility that extend beyond traditional compliance obligations to encompass proactive patient safety monitoring, algorithmic accountability, and transparent communication about AI capabilities and limitations. The companies that recognize these expanded responsibilities as competitive advantages rather than burdens will be best positioned to build sustainable market leadership in an era of regulatory transformation.

The One Big Beautiful Bill Act's AI moratorium proposal marks not an endpoint but the beginning of a new chapter in healthcare AI governance. Health tech entrepreneurs who approach this transition with strategic foresight, ethical commitment, and adaptive capabilities will find unprecedented opportunities to build companies that advance both technological innovation and human health. The regulatory storm ahead presents challenges, but it also offers the possibility of emerging into clearer skies with stronger, more capable organizations ready to lead the next generation of healthcare transformation.

The potential significance of this regulatory shift is considerable. For the first time in the modern era of healthcare technology, entrepreneurs may have the opportunity to operate within a single national framework, one that could prioritize innovation while, if well designed, maintaining essential patient protections. This would be a fundamental departure from the current environment, where success often depends as much on regulatory navigation capabilities as on technological innovation. The broader market access that federal standardization could produce may unleash a wave of innovation from smaller companies and individual entrepreneurs who have been kept out of healthcare AI markets by the complexity and cost of current compliance requirements.

However, this transformation also carries profound responsibilities that extend beyond traditional business considerations. Healthcare AI entrepreneurs will be operating in an environment where their decisions about product design, deployment strategies, and business practices may face limited regulatory oversight yet have a direct and substantial impact on patient welfare and public trust in healthcare technology. The absence of prescriptive regulatory requirements does not diminish the ethical obligations that come with developing technologies that affect human health and wellbeing. Indeed, it may heighten these obligations by placing greater responsibility on individual companies to self-regulate and maintain appropriate standards.

The legacy of this regulatory transformation will ultimately be determined not by the specific provisions of any particular legislation but by how the healthcare AI industry responds to the opportunities and responsibilities it creates. Companies that embrace the chance to demonstrate that innovation and patient protection can advance together will help establish a new paradigm for healthcare technology governance that could influence global approaches to AI regulation. Those that prioritize short-term commercial gains over long-term trust and safety considerations risk creating backlash that could result in more restrictive regulatory approaches in the future.

The healthcare AI sector stands at a crossroads where the decisions made over the next several years will shape the industry for decades to come. The proposed federal moratorium represents just one possible path forward, but regardless of its ultimate fate, the broader trend toward federal involvement in AI governance is likely to continue. Health tech entrepreneurs who position themselves as responsible stewards of this powerful technology while pursuing aggressive innovation and market expansion will be best prepared to thrive in whatever regulatory environment ultimately emerges.

The story of healthcare AI regulation is still being written, and health tech entrepreneurs have the opportunity to be authors rather than merely readers of this unfolding narrative. The choices made today about product development priorities, ethical frameworks, partnership strategies, and patient engagement approaches will determine not only individual company success but the entire industry's trajectory toward a future where artificial intelligence serves as a force for healing, equity, and human flourishing in healthcare. The regulatory transformation represented by OBBBA's AI moratorium proposal is not merely a policy change but a defining moment that will test the healthcare AI industry's commitment to balancing innovation with responsibility, competition with collaboration, and technological advancement with human values.