The American Healthcare Saga: From Medicine Men to Medicare

Introduction: A Tale of Three Transformations

Imagine, for a moment, three different Americans facing a medical crisis. The first, in 1723, relies on a combination of Native American herbal remedies and European bloodletting techniques. The second, in 1923, enters a newly built hospital where doctors have accepted germ theory but have no antibiotics to offer; penicillin will not be discovered for another five years. The third, in 2023, receives treatment informed by artificial intelligence, genetic testing, and robotic surgery. These three scenarios represent not just different eras of medical technology, but fundamentally different ways of thinking about health, society, and human rights.

The story of healthcare in the United States is not merely a chronicle of scientific advancement. It is a complex narrative of social evolution, cultural revolution, and economic transformation. Like other great revolutions in human history – the Agricultural Revolution, the Scientific Revolution, and the Industrial Revolution – the Healthcare Revolution has fundamentally altered how humans live, die, and understand their own existence.

This essay will explore how America transformed from a nation where most people were born and died in their own homes, attended only by family members and perhaps a local healer, to one where birth and death are highly medicalized events occurring in institutional settings. We will examine how healthcare evolved from a private matter between individuals to a complex web of institutions, regulations, and economic relationships that consume nearly one-fifth of the nation's GDP.

The Pre-Industrial Era: Medicine as Folk Wisdom

Before the United States existed as a nation, healthcare in colonial America was a fascinating blend of Native American traditional medicine, European medical practices, African healing traditions brought by enslaved peoples, and folk remedies passed down through generations. This period offers a striking example of how humans create and maintain intersubjective realities – shared beliefs and practices that exist purely in our collective imagination.

The medical practitioners of colonial America would seem strange to modern observers. They included not only physicians trained in European universities but also midwives, herb women, barber-surgeons, and various healers who combined physical treatments with spiritual practices. Those working in the European tradition understood the human body through the ancient Greek theory of four humors: blood, phlegm, yellow bile, and black bile. Disease was seen as an imbalance of these humors, and treatments aimed to restore equilibrium through bloodletting, purging, or inducing vomiting.

This medical paradigm was neither more nor less "real" than our modern understanding of disease as the result of bacteria, viruses, or cellular dysfunction. Both are human attempts to create order from the chaos of illness and death. The key difference is that our modern paradigm has proved more successful at predicting and controlling health outcomes.

The First Healthcare Revolution: The Rise of Scientific Medicine

The first great transformation in American healthcare began in the mid-19th century with the advent of scientific medicine. This revolution was part of the broader Scientific Revolution that had begun in Europe centuries earlier but took on distinct characteristics in the American context.

The acceptance of germ theory in the late 1800s, built on the work of Louis Pasteur and Robert Koch, marked a crucial turning point. Diseases now had specific, identifiable causes rather than being the result of miasmas or humoral imbalances. This new understanding led to dramatic improvements in public health through better sanitation, to new vaccines, and eventually to the development of antibiotics.

But the scientific revolution in medicine brought more than new treatments. It fundamentally altered the social structure of healthcare delivery. The rise of hospitals as centers of medical care, the professionalization of nursing, and the establishment of standardized medical education all emerged during this period.

The 1910 Flexner Report, which evaluated medical schools across the United States and Canada and pushed them toward a common scientific standard, exemplifies this transformation. The report led to the closure of many medical schools that didn't meet the new standards. While this improved the quality of medical education, it also had unintended consequences: it reduced the number of African American physicians and women in medicine, and it established a pattern of expensive, lengthy medical training that continues to influence healthcare costs today.

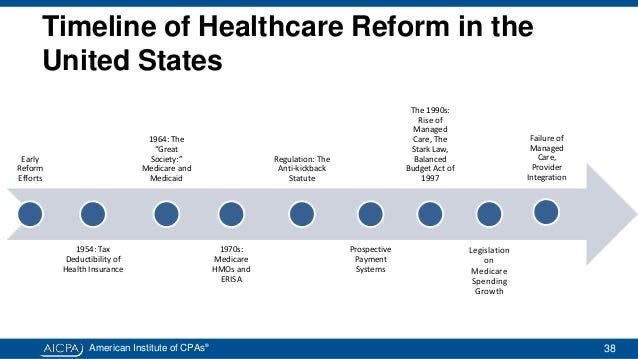

The Second Healthcare Revolution: The Rise of Insurance

The second great transformation in American healthcare wasn't about medicine at all – it was about money. The story of how healthcare became tied to employment in the United States is a perfect example of how historical accidents can shape social institutions for generations.

During World War II, wage controls prevented employers from competing for workers by offering higher salaries. Instead, they began offering health insurance as a benefit. This practice was encouraged by tax policies that made employer-provided health insurance tax-deductible for businesses and tax-free for employees.

This seemingly minor policy decision had enormous consequences. It created a system where most Americans received health insurance through their employers, making access to healthcare dependent on employment status. This system worked reasonably well during the post-war economic boom, when many Americans worked long-term for large corporations. However, it would prove increasingly problematic in the more dynamic, service-based economy that emerged later.

The creation of Medicare and Medicaid in 1965 represented another crucial development in this period. These programs acknowledged that some populations – the elderly and the poor – needed government assistance to access healthcare. But rather than creating a universal system, these programs carved out specific populations for coverage, reinforcing the fragmented nature of American healthcare.

The Third Healthcare Revolution: The Digital Transformation

The third great transformation in American healthcare began in the late 20th century and continues today. This revolution is characterized by three main developments: the rise of digital technology, the explosion of healthcare costs, and the growing debate over whether healthcare is a human right.

Digital technology has transformed healthcare in ways that would seem magical to practitioners from a century ago. Electronic health records, while often criticized for their clunky interfaces, have created vast databases that enable population-level health research. In some studies, artificial intelligence systems have matched or outperformed clinicians on narrow diagnostic tasks, such as reading certain kinds of medical images. Telemedicine, accelerated by the COVID-19 pandemic, has made healthcare more accessible to many Americans.

However, these technological advances have come at a tremendous cost. Healthcare spending in the United States has grown from about 5% of GDP in 1960 to more than 17% today. This explosion in costs reflects both the increasing capabilities of modern medicine and the administrative complexity of the American healthcare system.

The debate over healthcare as a human right represents another fundamental shift in how Americans think about healthcare. The Affordable Care Act of 2010, while falling short of universal coverage, established the principle that no American should be denied health insurance due to pre-existing conditions. This represents a significant shift from viewing healthcare as a private good to seeing it as a public right.

The Unique Features of American Healthcare

The American healthcare system has several distinctive features that set it apart from those of other developed nations:

First, it is extraordinarily expensive. Americans spend far more per capita on healthcare than citizens of any other nation, yet fare worse than people in many other wealthy countries on measures such as life expectancy and infant mortality. This paradox reflects both the high costs of American medical care and the system's focus on treating disease rather than preventing it.

Second, it is remarkably fragmented. Unlike most developed nations, which have some form of universal healthcare system, the United States has multiple systems operating in parallel: employer-provided insurance, Medicare, Medicaid, the Veterans Health Administration, and various state-level programs. This fragmentation creates administrative complexity and reduces bargaining power with healthcare providers and pharmaceutical companies.

Third, it is highly innovative. Despite its inefficiencies, the American system has produced many of the world's most important medical advances. The combination of substantial research funding, both public and private, and a market-based system that rewards innovation has made the United States a leader in developing new treatments and technologies.

The Social Impact of Healthcare Evolution

The evolution of American healthcare has had profound effects on American society. Life expectancy increased dramatically during the 20th century, though it has recently shown troubling declines. Infant mortality has plummeted. Diseases that once killed millions, from smallpox to polio, have been eliminated or greatly reduced.

But these improvements haven't been distributed equally. Significant disparities in health outcomes persist along racial, ethnic, and socioeconomic lines. African Americans have shorter life expectancies than white Americans. Rural Americans have less access to healthcare than urban residents. Poor Americans often delay or forgo medical care due to cost concerns.

Healthcare has also become deeply intertwined with American politics and identity. Debates over healthcare reform reflect fundamental disagreements about the role of government, individual responsibility, and social solidarity. The COVID-19 pandemic highlighted how these divisions can affect public health responses and health outcomes.

The Economic Impact of Healthcare Evolution

The economic impact of healthcare evolution has been equally profound. Healthcare has become one of the largest sectors of the American economy, employing millions of people in roles ranging from doctors and nurses to insurance claims processors and medical device manufacturers.

The rise of healthcare costs has affected American business competitiveness, as companies struggle with the burden of providing health insurance to employees. It has influenced employment patterns, as some workers remain in jobs they dislike purely for the health benefits – a phenomenon known as "job lock."

Healthcare costs also deepen economic inequality. Medical debt is frequently cited as a leading contributor to personal bankruptcy in the United States, and the threat of catastrophic medical expenses keeps many Americans in poverty or near-poverty despite having steady employment.

The Technological Revolution in Healthcare

The technological transformation of healthcare continues at an accelerating pace. Genetic medicine promises treatments tailored to individual patients' DNA. Artificial intelligence is revolutionizing diagnosis and treatment planning. Robot-assisted surgery is becoming increasingly common.

These advances create new possibilities but also raise new ethical questions. Who owns genetic information? How should we balance privacy concerns with the benefits of sharing medical data? When should artificial intelligence be trusted to make medical decisions?

The rise of consumer healthcare technology – from fitness trackers to home genetic tests – is also changing how Americans think about health. Increasingly, health is seen not just as the absence of disease but as something to be actively monitored and optimized.

The Future of American Healthcare

As we look to the future, several trends seem likely to shape the evolution of American healthcare:

First, the aging of the population will create increasing demands on the healthcare system. The number of Americans over 65 is projected to nearly double by 2060, creating new challenges for Medicare and the healthcare workforce.

Second, climate change is likely to create new health challenges, from extreme weather events to changing patterns of infectious disease. The healthcare system will need to adapt to these new threats while also reducing its own significant environmental impact.

Third, technological advancement will continue to create new possibilities for treatment while raising costs and ethical questions. Genetic medicine, artificial intelligence, and other emerging technologies will require new regulatory frameworks and ethical guidelines.

Fourth, the debate over healthcare reform is likely to continue. The current system's high costs and incomplete coverage create pressure for change, but the complexity of the system and the power of vested interests make reform challenging.

The Philosophical Implications

The evolution of American healthcare raises profound philosophical questions about human nature and society. What is health? Who deserves care? How should we balance individual autonomy with public health needs?

The American approach to healthcare reflects certain cultural values: individualism, technological optimism, and faith in market solutions. Yet it also reveals the tensions between these values and the realities of human vulnerability and interdependence.

The history of American healthcare shows how humans create and maintain complex social systems that shape life, death, and everything in between. Like money, nations, and religions, healthcare systems are human constructions – intersubjective realities that exist because we collectively believe in them and act accordingly.

Lessons from History

The history of healthcare in the United States offers several important lessons:

First, healthcare systems are shaped by historical accidents as much as by deliberate design. The link between employment and health insurance, for example, was an unintended consequence of wartime wage controls.

Second, technological progress doesn't automatically lead to better health outcomes. Improvements in medical science must be accompanied by effective systems for delivering care and promoting health.

Third, healthcare reflects and reinforces broader social inequalities. Access to healthcare has historically been influenced by race, class, gender, and geography, and these disparities persist today.

Fourth, healthcare systems are remarkably resistant to change once established. The interests created by existing arrangements – from insurance companies to healthcare providers to pharmaceutical companies – make fundamental reform challenging.

The Role of Culture

The American healthcare system cannot be understood without considering the broader cultural context in which it developed. American values of individualism, self-reliance, and distrust of centralized authority have shaped healthcare policy and practice in distinctive ways.

The American emphasis on cutting-edge technology and specialist care, rather than primary care and prevention, reflects cultural values about progress and innovation. The resistance to universal healthcare reflects deep-seated beliefs about individual responsibility and the proper role of government.

Even the way Americans think about health and illness has been shaped by cultural factors. The emphasis on personal responsibility for health, the medicalization of natural processes like aging and childbirth, and the focus on treating symptoms rather than underlying causes all reflect particular cultural assumptions.

The Global Context

The American healthcare system exists within a global context. Medical knowledge, technologies, and professionals flow across national boundaries. Pharmaceutical research and development is a global enterprise. Diseases don't respect national borders, as the COVID-19 pandemic dramatically demonstrated.

Comparing the American system with those of other nations reveals both common challenges and distinctive features. All developed nations face rising healthcare costs and aging populations. All must balance access, quality, and cost. But the United States has chosen a uniquely complex and expensive way to address these challenges.

The Human Story

Behind the statistics and systems, the history of American healthcare is fundamentally a human story. It's about people seeking to understand and control the mysteries of health and illness. It's about the evolution of the relationship between healer and patient, from the intimate connections of traditional medicine to the complex networks of modern healthcare.

It's also a story about power – who has access to care, who makes decisions about treatment, who profits from illness and healing. The evolution of American healthcare reflects broader changes in how society distributes power and resources.

Conclusion: The Continuing Evolution

The story of healthcare in the United States is far from over. The system continues to evolve in response to new technologies, changing demographics, and shifting social values. The COVID-19 pandemic has exposed both the strengths and weaknesses of the current system, potentially catalyzing further changes.

What seems certain is that healthcare will remain central to American society, economy, and politics. The decisions we make about healthcare reflect and shape our values, our social relationships, and our understanding of what it means to be human.

As we face the challenges of the future – from climate change to emerging diseases to aging populations – the healthcare system will need to continue evolving. The question is whether we can learn from history to create a system that better serves all Americans while maintaining the innovation and quality that characterize the best of American healthcare.

The history of healthcare in the United States shows how humans can create complex systems that transform the basic conditions of human existence. It demonstrates both the power of human ingenuity and the persistent challenges of organizing society to meet basic human needs. As we continue to grapple with these challenges, understanding this history becomes ever more important.