The Future of AI in Healthcare: A Double-Edged Sword

These thoughts reflect my own views and not those of my employer.

The rapid evolution of artificial intelligence in healthcare is no longer a theoretical discussion but a reality shaping patient outcomes, clinical workflows, and medical research. Large language models (LLMs) and generative AI systems are now at the heart of this transformation, offering the potential to streamline diagnostics, improve physician efficiency, and personalize treatments. However, with this potential come significant ethical, regulatory, and technological challenges. The question we now face is: will AI revolutionize medicine in a way that benefits all, or will it exacerbate the very inefficiencies it aims to resolve?

AI in Healthcare: Where We Stand Today

In the last decade, LLMs have evolved from simple text-processing tools into highly sophisticated models capable of analyzing clinical data, generating patient reports, assisting in drug discovery, and even suggesting differential diagnoses. Companies like Google DeepMind, OpenAI, and Microsoft are investing billions in training models that aim to match or outperform human clinicians in certain diagnostic tasks.

For instance, recent advancements in multimodal AI, which combines radiology, pathology, genomics, and electronic health record (EHR) data, are proving to be a game-changer. In some published studies, AI systems have detected early-stage cancers in radiology scans as accurately as, or more accurately than, human radiologists, which could reduce misdiagnoses and save lives. Additionally, LLM-powered chatbots are being deployed in clinical settings to automate administrative tasks, transcribe physician notes, and even answer patients' common health-related questions.

However, the real test of AI in medicine is not in controlled research environments but in real-world deployment, where factors like data bias, regulatory compliance, and physician trust become significant hurdles.

The Challenge of AI Bias and Data Limitations

One of the biggest criticisms of AI in healthcare is the issue of bias in training data. Healthcare AI models are trained on datasets that may not be representative of the entire patient population, leading to disparities in outcomes. For example, an AI model trained on predominantly Caucasian patients may be less accurate in diagnosing diseases in Black, Hispanic, or Asian populations, exacerbating existing health inequities.

In 2023, studies reported that commercial AI-based dermatology tools performed significantly worse on darker skin tones, detecting melanomas less reliably than they did on lighter skin. This is less a technology problem than a data problem: without diverse and well-labeled datasets, AI risks reinforcing existing biases in medicine.
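One way to surface this kind of gap is to report a model's sensitivity (the share of true melanomas it catches) separately for each patient group rather than as a single overall number. The sketch below is only an illustration under stated assumptions: the function name `sensitivity_by_group`, the group labels, and the records are all made up for the example and are not data from any study.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN).

    Each record is (group, true_label, predicted_label), with 1 = melanoma
    and 0 = benign. A group with no positive cases maps to None.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    groups = set()
    for group, y_true, y_pred in records:
        groups.add(group)
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    result = {}
    for group in groups:
        positives = true_pos[group] + false_neg[group]
        result[group] = true_pos[group] / positives if positives else None
    return result

# Synthetic toy records (hypothetical, NOT real study data).
records = [
    ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 1),
    ("lighter", 1, 0), ("lighter", 0, 0),
    ("darker", 1, 1), ("darker", 1, 0), ("darker", 1, 0),
    ("darker", 1, 0), ("darker", 0, 0),
]

per_group = sensitivity_by_group(records)
gap = per_group["lighter"] - per_group["darker"]
print(per_group, gap)
```

In this toy data the model catches 3 of 4 melanomas in one group but only 1 of 4 in the other, a disparity that a single pooled accuracy figure would hide.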

The solution? Stronger data governance, increased transparency, and regulatory oversight to ensure AI models are trained on inclusive and representative datasets. The U.S. FDA, European Medicines Agency (EMA), and World Health Organization (WHO) are already working on AI validation frameworks to tackle these issues, but enforcement remains inconsistent.

Regulatory Bottlenecks: Is AI Moving Faster Than Healthcare Can Handle?

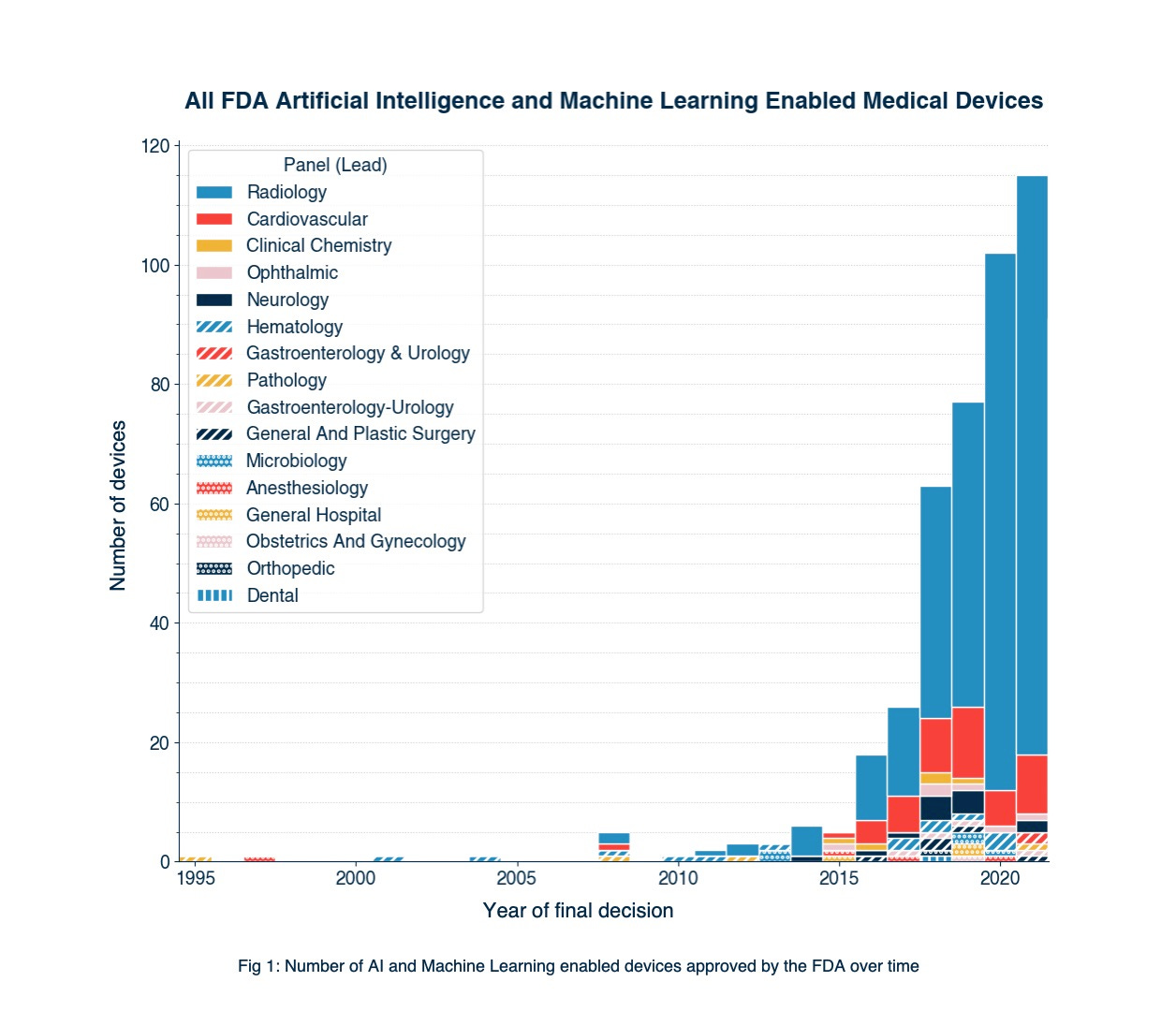

AI is advancing rapidly, but healthcare regulations are lagging behind. The FDA’s Software as a Medical Device (SaMD) framework provides guidelines for AI-driven clinical applications, but many AI-powered tools are still operating in a regulatory gray zone.

One major challenge is the black-box nature of AI models. Unlike traditional medical devices where outcomes can be fully explained and traced, deep learning models generate recommendations without clear explanations of their decision-making process. This raises serious concerns about liability and accountability—who is responsible when an AI makes a life-altering mistake? The hospital? The software developer? The physician?

Regulatory agencies are pushing for explainable AI (XAI), a movement focused on making AI decision-making more transparent. But even with regulatory approvals, the adoption of AI in hospitals is slow, largely due to physician skepticism and a lack of AI literacy in medical training.
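To make "explainable" a little more concrete, one common model-agnostic technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal pure-Python illustration, not a method any regulator prescribes. The classifier `toy_model`, the feature values, and the parameter names are hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(labels)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild every row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    # Hypothetical classifier: flags a case when feature 0 exceeds 0.5
    # and ignores feature 1 entirely.
    return 1 if row[0] > 0.5 else 0

# Made-up feature rows; labels are taken from the toy model itself,
# so baseline accuracy is 1.0 by construction.
rows = [[0.9, 3.0], [0.8, 1.0], [0.7, 4.0], [0.6, 2.0],
        [0.4, 3.5], [0.3, 1.5], [0.2, 2.5], [0.1, 0.5]]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Because the toy model never reads the second feature, shuffling it cannot change any prediction, so its importance is exactly zero. Real clinical models are far harder to probe, and a score like this describes what the model relies on, not whether that reliance is medically sound.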

Generative AI in Drug Discovery: A Game Changer or Hype?

Beyond clinical decision support, generative AI is revolutionizing drug discovery. AI-first pharmaceutical companies like Insilico Medicine, Deep Genomics, and Atomwise are leveraging AI to identify novel drug compounds, reducing the time it takes to bring new drugs to market.

Traditional drug discovery often takes 10–15 years from concept to approval, with an often-cited average cost of about $2.6 billion per drug. AI promises to cut this timeline in half by rapidly analyzing molecular structures and predicting their efficacy. In 2023, Insilico Medicine announced that its AI-discovered drug candidate had entered Phase 2 clinical trials, a milestone that signals the potential of AI to accelerate precision medicine.

However, skeptics argue that AI-generated drug compounds still require rigorous validation in preclinical and clinical trials, and regulatory agencies have yet to define clear pathways for AI-generated pharmaceuticals. If AI is to truly revolutionize drug development, collaboration between biotech firms, regulators, and academia will be essential.

The Future of AI in Healthcare: What’s Next?

Looking ahead, several key developments will define AI’s role in medicine:

AI-First Hospitals:

Leading institutions like Mayo Clinic, Cleveland Clinic, and Stanford Medicine are already embedding AI into their workflows. Expect to see fully AI-assisted hospitals within the next decade, where AI manages scheduling, clinical triage, and even robotic-assisted surgeries.

Real-Time Patient Monitoring & AI-Powered Wearables:

Apple and Google (which owns Fitbit) are racing to integrate AI-driven health monitoring into their wearable devices. Imagine a future where your smartwatch detects early signs of heart disease before symptoms appear and alerts your doctor in real time.

Regulation-Backed AI Guidelines:

As AI adoption grows, expect global AI regulation frameworks similar to the EU AI Act to define safety, ethical use, and compliance standards for healthcare AI.

AI-Driven Personalized Medicine:

AI will enable hyper-personalized treatments by analyzing patient genomics, lifestyle, and environmental factors, leading to tailored therapies for diseases like cancer, Alzheimer’s, and rare genetic disorders.

Conclusion: A Call for Responsible AI in Healthcare

AI in healthcare holds extraordinary promise, but its success depends on how responsibly it is developed, regulated, and integrated. While AI can augment clinicians, automate administrative tasks, and improve drug discovery, it must be deployed ethically, transparently, and inclusively.

The next five years will be a pivotal period—will AI make medicine more accessible, accurate, and efficient, or will it introduce new biases, risks, and regulatory nightmares? The answer lies in how governments, tech companies, and healthcare institutions collaborate to ensure AI serves all patients, not just those who fit the dataset.

The future of AI in medicine is not just about what it can do, but how we choose to use it.