Transforming Healthcare Insurance Through Knowledge Graphs and LLMs: A Comprehensive Strategy for Maximizing Value

Executive Summary

Healthcare insurance companies sit at a critical intersection of massive data volumes, complex regulatory requirements, and increasing pressure to deliver value to stakeholders. While Large Language Models (LLMs) represent a paradigm-shifting technology, their true potential in healthcare insurance can only be realized when paired with a robust knowledge graph foundation.

Knowledge graphs provide the semantic backbone that gives LLMs crucial context for healthcare insurance operations—connecting members, providers, claims, policies, clinical concepts, and regulatory requirements into an interlinked web of meaning. By implementing knowledge graphs and integrating them with LLMs through a structured approach, healthcare insurers can unlock unprecedented operational efficiencies, enhance decision-making capabilities, and create sustainable competitive advantages.

This comprehensive strategy outlines how healthcare insurers can restructure their data infrastructure around knowledge graph technology and leverage it to dramatically enhance LLM performance and value generation across the enterprise.

Introduction: The Knowledge Graph-LLM Opportunity

Beyond Data: The Knowledge Imperative

Healthcare insurance companies have historically focused on data management, but today's competitive landscape demands a shift toward knowledge management. Knowledge—the contextual understanding of how data elements relate to each other and what they mean in specific contexts—has become the critical differentiator.

Knowledge graphs represent a transformative approach to organizing information, moving beyond traditional relational data models to create explicit semantic connections between entities. When combined with the linguistic processing power of LLMs, knowledge graphs enable healthcare insurers to connect disparate data silos through semantic relationships that mirror real-world understanding, contextualize information across domains (from clinical concepts to benefit designs), enable reasoning over complex healthcare relationships, enhance LLM performance by providing structured knowledge, and future-proof data architecture through flexible semantic models.

The Knowledge Graph Advantage in Healthcare Insurance

Knowledge graphs are particularly valuable in healthcare insurance due to the domain's inherent complexity. The industry is characterized by multi-dimensional relationships between members, providers, employers, plans, benefits, claims, and clinical concepts, all of which are critical for accurate processing. Many of these concepts are themselves organized in complex hierarchies, as with diagnosis codes, procedure codes, and benefit structures. Additionally, compliance requirements change regularly and impact multiple operational areas, while provider networks have intricate relationships, specialties, and contractual arrangements. Understanding medical necessity, appropriate care, and health outcomes requires deep domain knowledge.

Knowledge graphs can represent these complexities explicitly, creating a semantic fabric that enhances both human and AI-driven processes.

The Knowledge Graph-LLM Synergy

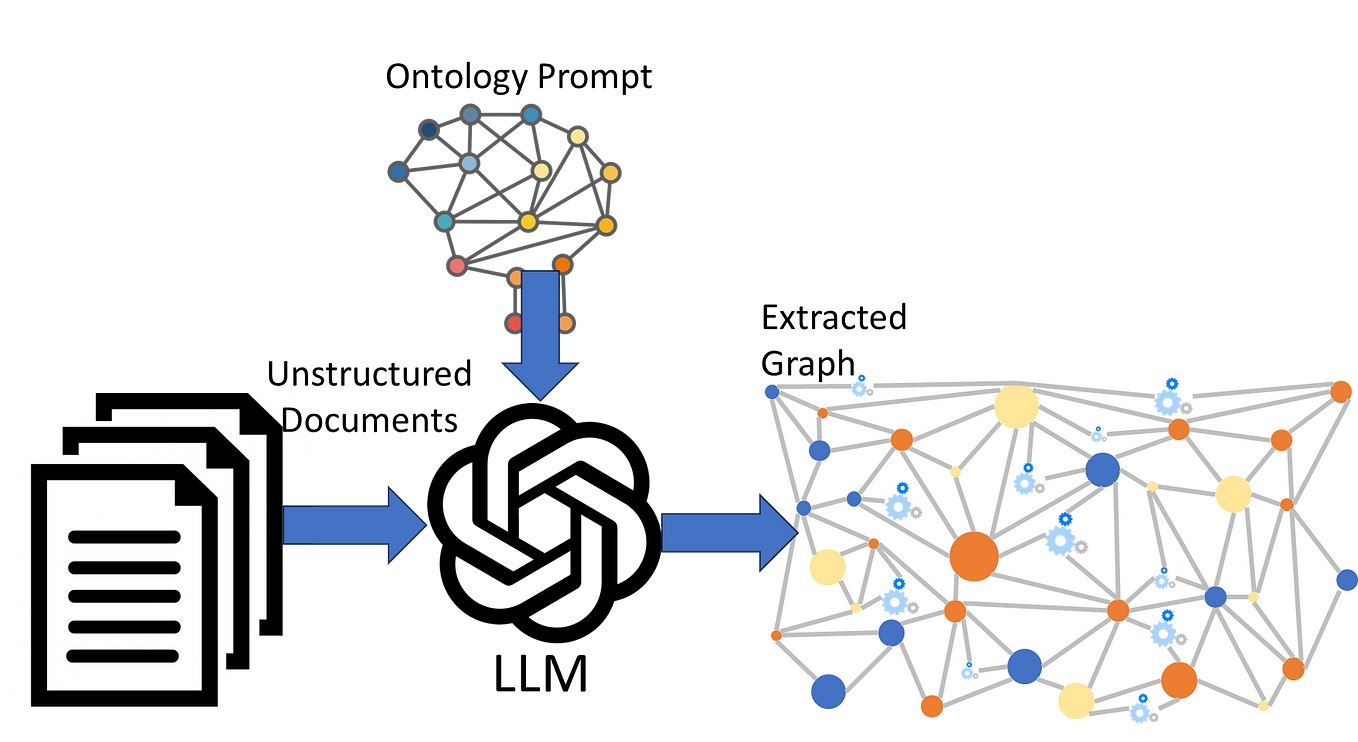

The combination of knowledge graphs and LLMs creates powerful synergies. LLMs enhanced by knowledge graphs can ground their responses in verified, structured organizational knowledge, provide context for ambiguous queries and requests, enable accurate entity resolution across conversational interfaces, and support fact-checking and verification of outputs.
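
As a minimal sketch of the grounding idea, the snippet below collects facts about one entity from a tiny in-memory list of triples and places them in a prompt. The triples, identifiers, and the `facts_about` and `build_grounded_prompt` helpers are hypothetical; a real deployment would presumably run a graph query against the enterprise knowledge graph and pass the resulting prompt to an LLM.

```python
# Hypothetical facts; in practice these would come from a graph query.
TRIPLES = [
    ("member:M1", "enrolled_in", "plan:GOLD_PPO"),
    ("plan:GOLD_PPO", "covers", "service:physical_therapy"),
    ("plan:GOLD_PPO", "visit_limit", "30 per year"),
    ("member:M2", "enrolled_in", "plan:BRONZE_HMO"),
]

def facts_about(entity, triples, hops=2):
    """Collect triples reachable from `entity` within `hops` outgoing steps."""
    frontier, seen, facts = {entity}, set(), []
    for _ in range(hops):
        next_frontier = set()
        for s, p, o in triples:
            if s in frontier and (s, p, o) not in seen:
                seen.add((s, p, o))
                facts.append((s, p, o))
                next_frontier.add(o)
        frontier = next_frontier
    return facts

def build_grounded_prompt(question, entity, triples):
    """Prefix the question with graph facts so the model answers from them."""
    lines = [f"- {s} {p} {o}" for s, p, o in facts_about(entity, triples)]
    return (
        "Answer using only the facts below.\n"
        "Facts:\n" + "\n".join(lines) + f"\nQuestion: {question}"
    )

prompt = build_grounded_prompt("Is physical therapy covered?", "member:M1", TRIPLES)
print(prompt)
```

Only facts connected to the member in question reach the prompt, which is what keeps the response tied to verified organizational knowledge rather than to whatever the model recalls on its own.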

Conversely, knowledge graphs can be enhanced by LLMs, which can extract entities and relationships from unstructured text to populate graphs, generate natural language explanations of graph patterns and insights, create user-friendly interfaces for complex graph queries, and support automatic knowledge graph expansion and enrichment.

Together, these technologies create a virtuous cycle where structured knowledge powers more accurate LLMs, and LLMs help build more comprehensive knowledge graphs.

The Transformation Imperative

For healthcare insurers, implementing knowledge graphs and LLMs is not merely a technology project but a fundamental business transformation. This transformation requires a strategic vision that reorients data strategy around knowledge and semantics, architectural redesign that builds flexible graph infrastructure alongside traditional systems, process reimagination that rethinks workflows to leverage connected knowledge, capability development that builds new skills in graph databases, ontology engineering, and AI, and change management that guides the organization toward knowledge-centric operations.

Current State Assessment for Knowledge Graph Readiness

Before implementing knowledge graphs, healthcare insurers must conduct a targeted assessment to identify readiness, opportunities, and challenges specific to graph-based approaches.

Data Source and Structure Assessment

A comprehensive assessment begins with cataloging the core entities and relationships that will form the foundation of your knowledge graph. This includes member entities (demographics, enrollment history, benefit elections, health status), provider entities (practitioners, facilities, networks, specialties, credentials), plan entities (products, benefit designs, formularies, coverage rules), claim entities (submissions, lines, adjustments, payments, appeals), clinical entities (diagnoses, procedures, medications, lab results), and operational entities (departments, processes, systems, documents).

Next, identify explicit and implicit relationships between these entities. Explicit relationships are formally defined connections in current systems (such as a member enrolled in a plan), while implicit relationships are connections implied but not formally represented (such as a provider who specializes in a particular condition). Also look for derived relationships that can be inferred through analysis (like members with similar utilization patterns) and important connections not currently captured in any system.
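
To make the idea of an implicit relationship concrete, the sketch below proposes a "focuses_on" edge when one condition dominates a provider's claims. The claim records, the `infer_focus_areas` helper, and both thresholds are assumptions chosen for illustration, not recommended values.

```python
from collections import Counter

# Hypothetical claim records: (provider_id, diagnosis_group)
claims = [
    ("prov:A", "diabetes"), ("prov:A", "diabetes"), ("prov:A", "diabetes"),
    ("prov:A", "hypertension"),
    ("prov:B", "asthma"), ("prov:B", "diabetes"),
]

def infer_focus_areas(claims, min_share=0.6, min_claims=3):
    """Propose (provider, 'focuses_on', condition, share) edges
    when a single condition accounts for most of a provider's claims."""
    per_provider = {}
    for provider, condition in claims:
        per_provider.setdefault(provider, Counter())[condition] += 1
    edges = []
    for provider, counts in per_provider.items():
        total = sum(counts.values())
        for condition, n in counts.items():
            share = n / total
            if n >= min_claims and share >= min_share:
                edges.append((provider, "focuses_on", condition, round(share, 2)))
    return edges

implicit_edges = infer_focus_areas(claims)
print(implicit_edges)
```

Edges produced this way are candidates rather than facts, so they would normally carry their supporting share and be reviewed before being treated as authoritative.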

Evaluate how well current data models capture domain complexity by examining normalization levels in relational databases, entity resolution challenges across systems, temporal data handling for time-based changes, hierarchical structures for classifications and taxonomies, and how business rules and policies are encoded. This analysis will identify areas where relational models struggle with complexity that graphs could better address.

Semantic Readiness Assessment

Assess the current state of terminology standardization by examining standard code systems implementation (ICD, CPT, HCPCS, NDC, SNOMED, LOINC), custom classifications and proprietary groupings, terminology governance processes, mapping completeness between different coding systems, and semantic consistency across the organization. Document both formal terminology systems and informal language patterns that impact semantic understanding.

Inventory current semantic structures including formal ontologies (explicitly defined semantic models with classes and properties), business taxonomies (classification systems used for organization and retrieval), semantic metadata (descriptions, definitions, and contextual information), reference data (standard lookup tables and their management processes), and semantic governance processes. These existing semantic assets can potentially be leveraged in knowledge graph development.

Identify areas where semantic connections are missing or inadequate, such as cross-domain connections between clinical, financial, and operational concepts, contextual knowledge of how concepts change meaning in different contexts, inferential gaps where important conclusions cannot be drawn from available data, terminology inconsistencies with conflicting or overlapping definitions, and knowledge silos with isolated pockets of domain expertise not formally captured. These gaps represent priority areas for knowledge graph implementation.

Technical Environment Assessment

Evaluate the current environment's readiness for graph technologies by assessing previous projects or pilots using graph databases, familiarity with graph query languages (SPARQL, Gremlin, Cypher), availability of graph visualization tools, infrastructure for large-scale graph algorithms, and existing APIs or integration patterns for graphs. Document both technology assets and skill gaps related to graph implementation.

Map the current data integration approach by examining integration patterns (ETL processes, APIs, event streams, data virtualization), integration challenges (volume, velocity, variety issues), current master data management solutions and their effectiveness, resources required for current data synchronization, and latency expectations for different use cases. Understanding this landscape helps determine how knowledge graphs will fit into existing data flows.

Assess how well current analytical tools could leverage knowledge graphs by evaluating SQL vs. graph query language support, the ability to represent and explore relationships with visualization tools, potential to incorporate graph features in AI/ML models, flexibility of reporting systems to use graph data sources, and self-service analytics tools that could benefit from semantic layers. This assessment identifies both constraints and opportunities for analytical leverage of knowledge graphs.

Use Case Identification and Prioritization

Identify business processes that rely heavily on connected knowledge, such as care management (member identification, care planning, intervention management), utilization management (prior authorization, clinical review, medical necessity determination), network management (provider recruitment, contracting, performance evaluation), risk adjustment (member condition identification, documentation improvement, submission), claims processing (policy interpretation, benefit determination, payment calculation), and fraud detection (pattern recognition, relationship analysis, anomaly detection). For each process, document how improved semantic connections could enhance outcomes.

Identify specific challenges that knowledge graphs could address, including data context issues (problems due to missing contextual information), relationship complexity (challenges in understanding multi-faceted relationships), knowledge accessibility (difficulties in finding relevant information), decision consistency (variations in interpretations and decisions), and inference limitations (inability to draw connections between related concepts). These pain points can form the basis for high-value initial use cases.

Estimate potential value creation opportunities including efficiency gains from reduction in manual research and context-switching, decision quality improvements through more consistent, evidence-based decisions, revenue opportunities from enhanced risk adjustment and payment accuracy, cost avoidance through reduced waste and inappropriate care, and strategic advantages from new capabilities enabled by connected knowledge. Quantify these opportunities where possible to support investment justification.

Organizational Readiness Assessment

Evaluate the organization's approach to knowledge management by examining how effectively institutional knowledge is documented, mechanisms for distributing and accessing knowledge, processes for validating and updating knowledge, attitudes toward knowledge sharing and reuse, and metrics for knowledge quality and utilization. This assessment reveals cultural factors that may impact knowledge graph adoption.

Catalog existing skills relevant to knowledge graph implementation, including experience with semantic modeling and ontology development, expertise in knowledge representation and reasoning, skills in developing and maintaining metadata schemas, subject matter experts who can validate semantic models, and technical skills in graph database implementation. Identify both available resources and critical skill gaps.

Assess organizational readiness for knowledge-centric transformation by evaluating leadership understanding and support for semantic approaches, current attitudes toward data sharing and integration, willingness to adopt new approaches and technologies, cross-functional cooperation and communication patterns, and track record with previous transformation initiatives. These factors will inform change management strategies for knowledge graph implementation.

Knowledge Graph Foundation: Architecture and Implementation

With assessment complete, healthcare insurers must establish a robust knowledge graph foundation that can support enterprise-scale implementation and LLM integration.

Knowledge Graph Conceptual Architecture

Establish guiding principles for knowledge graph architecture that prioritize meaning and relationships over physical storage, maintain unified identities across disparate sources, accommodate changing knowledge without rebuilding, balance centralized governance with distributed expertise, separate domain knowledge from application-specific needs, support both explicit and derived relationships, balance semantic richness with query efficiency, and combine rigorous ontology with pragmatic property graphs. These principles ensure the architecture will support both current and future needs.

Design a layered architecture that supports flexible evolution by incorporating a data layer of raw sources and their native formats, an integration layer with mechanisms for extracting and normalizing data, an entity layer with consolidated entities and properties, a relationship layer with explicit connections between entities, a semantic layer with ontological classifications and meanings, an inference layer with derived relationships and knowledge, and an application layer with use case-specific views and interfaces. This layered approach allows different aspects of the knowledge graph to evolve at different rates while maintaining overall coherence.

Determine the optimal topology for the enterprise knowledge graph by choosing between a centralized model (single authoritative graph for the entire enterprise), a federated model (coordinated domain-specific graphs with linkages), a hybrid model (core enterprise graph with specialized extensions), or materialized versus virtual approaches (physical graph storage vs. on-demand construction). The choice of topology should balance governance needs, performance requirements, and organizational structure.

Technical Architecture Decisions

Evaluate and select appropriate graph database technology from options including property graph databases (Neo4j, Amazon Neptune, TigerGraph), RDF triple stores (AllegroGraph, Stardog, GraphDB), multi-model databases (ArangoDB, Azure Cosmos DB, OrientDB), graph processing frameworks (Apache TinkerPop, Apache Spark GraphX), and cloud-native versus self-hosted solutions. Some products span categories; Amazon Neptune, for example, supports both property graph and RDF models. Consider factors such as query language support, scalability characteristics, inference capabilities, integration options, performance benchmarks, total cost of ownership, and community and vendor support.

Design the physical storage architecture by addressing sizing and scalability (initial capacity and growth projections), partitioning strategy, replication model for high availability and disaster recovery, caching approach for performance optimization, storage tiering to balance performance and cost, backup and recovery to ensure knowledge persistence, and environment strategy for development, testing, and production separation. Document architecture decisions and their rationales to guide implementation.

Establish infrastructure for graph analytics and algorithms, including graph compute engines for running algorithms at scale, algorithm libraries with reusable graph algorithms, distributed processing frameworks for parallel computation, memory management approaches for handling large graphs efficiently, query optimization techniques, and visualization processing for rendering large graphs. This infrastructure enables complex knowledge discovery beyond basic graph queries.

Knowledge Graph Foundation Implementation

Develop the core schema for the enterprise knowledge graph, including entity types (primary objects and their properties), relationship types (standard connections with cardinality and directionality), property definitions (attributes with data types and constraints), identifier strategy for uniquely identifying graph elements, temporal model for representing time-based changes, and provenance model for tracking the source and confidence of graph assertions. The schema should balance standardization with flexibility to accommodate future needs.
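
The schema elements above can be sketched with plain Python dataclasses. This is an illustrative shape only: the field names, the `active_on` helper, and the sample enrollment are assumptions, and a production schema would live in the chosen graph database rather than in application code.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class Node:
    node_id: str            # stable identifier, e.g. "member:M1"
    label: str              # entity type, e.g. "Member"
    properties: tuple = ()  # (key, value) pairs; a tuple keeps the node hashable

@dataclass(frozen=True)
class Edge:
    source: str
    rel_type: str
    target: str
    valid_from: date                  # temporal model: start of validity
    valid_to: Optional[date] = None   # None means still in effect
    provenance: str = "unknown"       # system that asserted the edge
    confidence: float = 1.0           # 1.0 for system-of-record facts

    def active_on(self, day: date) -> bool:
        """True if the relationship was in effect on the given day."""
        return self.valid_from <= day and (self.valid_to is None or day <= self.valid_to)

enrollment = Edge(
    "member:M1", "ENROLLED_IN", "plan:GOLD_PPO",
    valid_from=date(2023, 1, 1), valid_to=date(2023, 12, 31),
    provenance="enrollment_system",
)
print(enrollment.active_on(date(2023, 6, 1)))
```

Carrying validity dates and provenance on every edge is one way to answer point-in-time questions ("which plan was this member in on the date of service?") without a separate history table.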

Establish the initial knowledge population process by identifying authoritative systems of record, defining entity resolution rules for consolidating duplicates, creating extract and transform processes for converting source data to graph format, implementing validation and quality checks, designing incremental update mechanisms, and tracking changes to the knowledge base over time. Document detailed mappings between source systems and graph structures.

Implement the technical foundation by setting up development, test, and production environments, deploying and configuring graph database software, implementing access controls and security measures, setting up monitoring instrumentation, configuring regular backup processes, establishing disaster recovery procedures, and designing scaling mechanisms for future growth. Follow infrastructure-as-code practices to ensure reproducibility and consistency.

Develop interfaces for accessing the knowledge graph, including RESTful and GraphQL interfaces for applications, reusable queries for common access patterns, language-specific client libraries for easy integration, performance tuning for common access patterns, access controls at the API and query level, caching strategies to reduce database load, and comprehensive reference documentation for developers. These interfaces bridge the gap between the knowledge graph and consuming applications.

Knowledge Graph Management and Operations

Establish processes for keeping the graph current by identifying updates in source systems, determining synchronization frequency (real-time, near-real-time, or batch), handling contradictory information from different sources, managing point-in-time snapshots for analysis, tracking changes to graph content over time, and performing periodic validation against authoritative sources. Document detailed synchronization workflows for each major data source.
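
One possible rule for handling contradictory information is to prefer the most authoritative source and break ties by recency. The sketch below assumes a hypothetical source ranking and record layout; actual precedence rules would come from data governance decisions.

```python
from datetime import date

# Assumed source ranking; a lower number means more authoritative.
SOURCE_PRIORITY = {"credentialing": 0, "claims": 1, "directory_scrape": 2}

def resolve_conflict(assertions):
    """Pick one assertion: most authoritative source first, then most recent."""
    return min(
        assertions,
        key=lambda a: (SOURCE_PRIORITY.get(a["source"], 99), -a["as_of"].toordinal()),
    )

# Three systems disagree about a provider's specialty.
assertions = [
    {"value": "Cardiology", "source": "directory_scrape", "as_of": date(2024, 5, 1)},
    {"value": "Interventional Cardiology", "source": "credentialing", "as_of": date(2023, 9, 1)},
    {"value": "Cardiology", "source": "claims", "as_of": date(2024, 4, 1)},
]
winner = resolve_conflict(assertions)
print(winner["value"])
```

The losing assertions need not be discarded; keeping them with their provenance supports later validation against authoritative sources.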

Implement ongoing performance management by monitoring query execution times and resource usage, balancing concurrent access with background processes, tuning indexes based on access patterns, implementing multi-level caching for frequent queries, optimizing inefficient query patterns, projecting future resource needs based on growth, and regularly benchmarking key operations. Establish baselines and thresholds for performance metrics to enable proactive optimization.

Develop operational practices specific to knowledge graphs, including processes for safely changing graph structure, CI/CD approaches for graph components, validation framework for graph integrity and functionality, synchronization across development/test/production environments, coordination of graph changes with application updates, procedures for recovering from problematic deployments, and automation for keeping technical documentation current. These practices ensure the knowledge graph can evolve reliably over time.

Data Semantics: Ontologies and Taxonomies for Healthcare Insurance

A robust semantic layer is essential for maximizing knowledge graph value. Healthcare insurers must develop comprehensive ontologies and taxonomies that formalize domain knowledge and enable powerful inferencing capabilities.

Ontology Strategy and Planning

Determine the boundaries and focus of ontological development by defining which aspects of healthcare insurance to model, deciding on the level of detail in different domain areas, considering historical, current, and future states, establishing organizational or regional scope, aligning with specific applications driving ontology requirements, and planning areas for future expansion. Document scope decisions and their rationales to guide development.

Choose appropriate methodologies for ontology development, such as a top-down approach (starting with high-level concepts and refining), a bottom-up approach (starting with specific instances and generalizing), a middle-out approach (balancing conceptual and practical considerations), collaborative development methods for engaging domain experts, agile ontology development that evolves the model iteratively, and formal versus lightweight approaches that balance logical rigor with pragmatic utility. The methodology should align with organizational culture and available expertise.

Establish governance mechanisms for ontology management, including clear accountability for ontology components, processes for reviewing and approving modifications, validation procedures for ontological consistency, version control for managing evolution over time, collaboration framework for stakeholder contributions, and conflict resolution approaches for handling competing definitions. Document governance processes and roles to ensure sustainable ontology development.

Healthcare Insurance Domain Ontologies

Develop semantic models for members and populations, including demographic concepts (age, gender, location, socioeconomic factors), enrollment concepts (coverage periods, plan selections, eligibility rules), health status concepts (conditions, risk factors, functional status), preference concepts (communication channels, language, engagement patterns), relationship concepts (family structures, caregivers, social determinants), and segment concepts (risk groups, clinical cohorts, behavioral classifications). Include standard terminology alignments such as CDC demographics and social determinants of health frameworks.

Create semantic models for providers and networks, including provider type concepts (practitioners, facilities, organizations, groups), specialty concepts (clinical specialization, certification areas), network concepts (participation status, tier designations, exclusivity), contractual concepts (agreement terms, payment models, performance targets), location concepts (service areas, accessibility, geographic relationships), and capability concepts (services offered, equipment, special programs). Align with industry standards like NUCC taxonomy, NPI categorization, and facility types.

Develop semantic models for insurance products and benefits, including product concepts (plan types, market segments, regulatory categories), benefit concepts (covered services, limitations, exclusions), financial concepts (premium, cost-sharing, maximums, accumulators), formulary concepts (drug coverage, tiers, utilization management), network concepts (in-network, out-of-network, referral requirements), and temporal concepts (effective periods, waiting periods, grandfathering). Map to industry standards including HIPAA benefit categories and ACA metal levels.

Create semantic models for clinical knowledge, including condition concepts (diseases, disorders, symptoms, functional limitations), procedure concepts (interventions, surgeries, therapies, diagnostic tests), medication concepts (drugs, classes, formulations, administration routes), result concepts (laboratory values, vital signs, assessment scores), anatomical concepts (body systems, structures, functions), and severity concepts (stages, grades, risk levels, acuity measures). Leverage clinical standards including SNOMED CT, RxNorm, LOINC, and clinical classification systems.

Develop semantic models for claims and encounters, including claim concepts (submissions, adjustments, denials, payments), encounter concepts (visit types, settings, admission types), billing concepts (charges, allowed amounts, negotiated rates), coding concepts (diagnosis, procedure, revenue codes and relationships), payment concepts (reimbursement methodologies, adjudication rules), and timeline concepts (dates of service, submission, processing, payment). Align with industry standards, including standard claim formats such as the UB-04 and CMS-1500 forms.

Create semantic models for regulatory concepts, including regulatory authority concepts (federal, state, accreditation bodies), requirement concepts (mandates, restrictions, reporting obligations), timeframe concepts (effective dates, compliance deadlines, reporting periods), jurisdiction concepts (geographic applicability of regulations), documentation concepts (required records, retention periods, formats), and penalty concepts (sanctions, corrective actions, monetary consequences). Map to specific regulatory frameworks such as HIPAA, ACA, and state-specific requirements.

Ontology Integration and Alignment

Establish connections between domain ontologies by modeling member-provider relationships (treatment relationships, PCP assignments), member-benefit relationships (eligibility rules, coverage exceptions), provider-benefit relationships (network participation, payment terms), clinical-benefit relationships (medical necessity, coverage criteria), regulatory-operational relationships (compliance impact on processes), and claims-clinical relationships (diagnosis-procedure relationships, episodes). These cross-domain relationships enable powerful inferences across traditionally siloed areas.
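
As a small illustration of cross-domain inference, the sketch below answers "is this provider in network for this member?" by walking from the member domain through the plan domain to the network domain. The edge types and identifiers are hypothetical, and a graph database would express the same traversal as a single path query.

```python
# Hypothetical edges spanning the member, plan, and provider domains.
edges = {
    ("member:M1", "ENROLLED_IN", "plan:GOLD_PPO"),
    ("plan:GOLD_PPO", "USES_NETWORK", "network:N1"),
    ("provider:P1", "PARTICIPATES_IN", "network:N1"),
    ("provider:P2", "PARTICIPATES_IN", "network:N2"),
}

def targets(source, rel):
    """All nodes reachable from `source` over one edge of type `rel`."""
    return {t for s, r, t in edges if s == source and r == rel}

def is_in_network(member, provider):
    """True if any network used by the member's plans includes the provider."""
    member_networks = set()
    for plan in targets(member, "ENROLLED_IN"):
        member_networks |= targets(plan, "USES_NETWORK")
    return bool(member_networks & targets(provider, "PARTICIPATES_IN"))

print(is_in_network("member:M1", "provider:P1"))
print(is_in_network("member:M1", "provider:P2"))
```

No single source system holds this answer; it only emerges once enrollment, plan design, and network participation are linked in one graph.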

Connect internal ontologies to industry standards through clinical terminology mapping (ICD, CPT, HCPCS, NDC, SNOMED, LOINC), administrative terminology mapping (place of service, type of bill, revenue codes), benefit terminology mapping (HIPAA benefit categories, service types), provider terminology mapping (specialty codes, facility types, taxonomies), member terminology mapping (demographics, language, ethnicity standards), and regulatory terminology mapping (standard identifiers for requirements). Document both exact matches and partial or approximate alignments.
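
One way to record both exact and approximate alignments is to store a mapping relation alongside each code pair. The sketch below borrows relation names from the SKOS mapping properties (`exactMatch`, `closeMatch`, `broadMatch`, `narrowMatch`); every code and system name in it is a placeholder, not real terminology content.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Mapping:
    source_code: str      # code in the internal system
    target_system: str    # name of the external code system
    target_code: str
    relation: str         # "exactMatch", "closeMatch", "broadMatch", or "narrowMatch"

# Placeholder codes for illustration only.
MAPPINGS = [
    Mapping("INT-001", "STD-A", "A100", "exactMatch"),
    Mapping("INT-002", "STD-A", "A200", "closeMatch"),
    Mapping("INT-002", "STD-B", "B750", "broadMatch"),
]

def translate(code, target_system, allowed=("exactMatch",)):
    """Return target codes for `code`, limited to the relations the caller accepts."""
    return [
        m.target_code
        for m in MAPPINGS
        if m.source_code == code
        and m.target_system == target_system
        and m.relation in allowed
    ]

print(translate("INT-001", "STD-A"))
print(translate("INT-002", "STD-A", allowed=("exactMatch", "closeMatch")))
```

Making the caller state which relations are acceptable lets a payment rule insist on exact matches while an analytics query tolerates approximate ones.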

Consider utilizing upper ontologies and established reference vocabularies for better integration, such as BFO (Basic Formal Ontology) with foundational distinctions and categories, DOLCE (Descriptive Ontology for Linguistic and Cognitive Engineering) with entity types and qualities, UFO (Unified Foundational Ontology) as a conceptual modeling foundation, IOF (Industrial Ontologies Foundry) with industrial and operational concepts, Schema.org with web-standard entities and relationships, and FIBO (Financial Industry Business Ontology) with financial concepts applicable to insurance. Upper ontologies such as BFO, DOLCE, and UFO provide consistent philosophical foundations across domains, while the domain-level vocabularies supply reusable concepts.

Ontology Implementation and Management

Transform conceptual models into formal ontologies by selecting an ontology language (OWL, RDFS, SKOS, or custom approaches), developing class hierarchies with taxonomic structure, defining properties with relationships and constraints, formulating logical rules and restrictions as axioms, creating example individuals for validation, and implementing annotations with metadata for human understanding. Balance expressivity with practical usability based on implementation goals.
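
The class-hierarchy step can be illustrated with a toy subsumption check. This sketch assumes a single-parent hierarchy with hypothetical class names; ontology languages such as OWL allow multiple superclasses and far richer axioms, so treat it as a simplification.

```python
# Hypothetical class hierarchy: child class -> parent class.
SUBCLASS_OF = {
    "PrimaryCarePhysician": "Practitioner",
    "Cardiologist": "Practitioner",
    "Practitioner": "Provider",
    "Hospital": "Facility",
    "Facility": "Provider",
}

def ancestors(cls):
    """Walk the hierarchy upward and return all superclasses, nearest first."""
    result = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        result.append(cls)
    return result

def is_a(cls, candidate):
    """True if `cls` is `candidate` or one of its subclasses."""
    return cls == candidate or candidate in ancestors(cls)

print(ancestors("Cardiologist"))
print(is_a("Hospital", "Provider"))
```

A rule written against `Provider` then applies to cardiologists and hospitals alike, which is the practical payoff of a well-structured taxonomy.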

Create comprehensive documentation for the ontologies, including concept dictionaries with definitions and usage guidance, relationship catalogs with domains and ranges, visual representations showing key concept hierarchies, change history documenting evolution over time, usage examples showing how concepts apply in different contexts, and known limitations identifying areas of incompleteness or uncertainty. Documentation should serve both technical implementers and domain experts.

Establish processes for ensuring ontology quality, including logical consistency checking to identify contradictions, competency question validation to test against intended use cases, expert review by domain specialists, instance testing to verify behavior with example data, query testing to ensure ontology supports required queries, and integration testing to validate connections between domains. Document validation results and address identified issues before deployment.

Develop processes for ongoing ontology evolution, including change request processes for proposing and evaluating modifications, impact analysis to assess effects of changes, version control for managing multiple ontology versions, deprecation approach for handling obsolete concepts gracefully, extension mechanisms for adding new domains or concepts, and release management to coordinate ontology updates with applications. Ensure ontologies can evolve while maintaining backward compatibility.

Knowledge Graph Population and Enrichment

Building and maintaining a comprehensive knowledge graph requires systematic processes for initial population, ongoing enrichment, and quality assurance. Healthcare insurers must implement robust mechanisms for transforming diverse data into connected knowledge.

Data Source Integration Strategy

Prioritize systems for knowledge graph integration by identifying authoritative systems of record for key entities, sources with particularly valuable relationship information, systems with important contextual information, systems requiring immediate graph updates, sources of longitudinal information, and third-party information for graph enrichment. Develop an integration sequence based on value, complexity, and dependencies.

Design appropriate extraction methods for each source, such as reading directly from source databases, using available application interfaces, capturing change events in real-time, processing exports and feeds, identifying and extracting only changes, or pulling specific subsets of data. Document technical details including authentication, authorization, and rate limits.

Develop approaches for converting source data to graph format by defining entity matching rules to identify the same entity across sources, property mapping to translate source attributes to graph properties, relationship extraction to identify connections between entities, semantic classification to assign ontological types to entities, data cleansing to handle inconsistencies and quality issues, and temporal transformation to manage time-based aspects of data. Create detailed mapping specifications for each source system.
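
A mapping specification of this kind can be sketched as a small table that says which source columns become relationships. The rows, column names, and the `RELATIONSHIP_COLUMNS` spec below are hypothetical; the point is only to show property mapping, light cleansing, and relationship extraction in one pass.

```python
# Hypothetical rows from a relational enrollment table.
rows = [
    {"member_id": "M1", "first_name": " Ana ", "plan_code": "GOLD_PPO", "pcp_id": "P1"},
    {"member_id": "M2", "first_name": "Ben", "plan_code": "BRONZE_HMO", "pcp_id": None},
]

# Assumed mapping spec: source column -> (relationship type, target id prefix)
RELATIONSHIP_COLUMNS = {
    "plan_code": ("ENROLLED_IN", "plan"),
    "pcp_id": ("HAS_PCP", "provider"),
}

def row_to_graph(row):
    """Convert one source row into a node dict and a list of edge triples."""
    node_id = f"member:{row['member_id']}"
    node = {"id": node_id, "type": "Member", "first_name": row["first_name"].strip()}
    edges = [
        (node_id, rel, f"{prefix}:{row[col]}")
        for col, (rel, prefix) in RELATIONSHIP_COLUMNS.items()
        if row.get(col)  # skip missing foreign keys rather than create dangling edges
    ]
    return node, edges

nodes, edges = [], []
for row in rows:
    node, row_edges = row_to_graph(row)
    nodes.append(node)
    edges.extend(row_edges)

print(nodes)
print(edges)
```

Keeping the mapping in data rather than in code makes it easier to document and review per source system.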

Entity Resolution and Linking

Establish systematic approaches to entity resolution by defining matching criteria for determining when records represent the same entity, methods for quantifying match certainty with confidence levels, process for resolving ambiguous matches, authoritative sources for entity information, mechanisms for maintaining stable identifiers over time, and approaches for addressing incorrect previous resolutions. Develop different resolution rules for various entity types such as members, providers, and claims.
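
Matching criteria and confidence levels can be sketched as a weighted score with two thresholds: one for automatic matches and one for manual review. The field weights, thresholds, and sample records below are assumptions; real values would be tuned against labeled match data, and different entity types would use different fields.

```python
from difflib import SequenceMatcher

# Assumed field weights; they sum to 1.0 so the score stays in the range 0..1.
WEIGHTS = {"last_name": 0.4, "dob": 0.4, "zip": 0.2}

def similarity(a, b):
    """Case-insensitive string similarity in the range 0..1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def match_score(rec_a, rec_b):
    """Weighted evidence that two member records describe the same person."""
    score = WEIGHTS["last_name"] * similarity(rec_a["last_name"], rec_b["last_name"])
    score += WEIGHTS["dob"] * (rec_a["dob"] == rec_b["dob"])
    score += WEIGHTS["zip"] * (rec_a["zip"] == rec_b["zip"])
    return score

def classify(score, auto=0.9, review=0.7):
    """Route a pair: automatic match, manual review, or no match."""
    if score >= auto:
        return "match"
    return "review" if score >= review else "no_match"

claims_rec = {"last_name": "Nguyen", "dob": "1980-02-14", "zip": "94110"}
enroll_rec = {"last_name": "Nguyen", "dob": "1980-02-14", "zip": "94110"}
moved_rec = {"last_name": "Nguyen", "dob": "1980-02-14", "zip": "10001"}

print(classify(match_score(claims_rec, enroll_rec)))
print(classify(match_score(claims_rec, moved_rec)))
```

The middle band is what feeds the process for resolving ambiguous matches: pairs that are neither clearly the same nor clearly different go to a person.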

Create processes for connecting entities across domains by identifying direct references between entities, inferring connections from behavior or context, connecting entities based on time relationships, linking based on geographic proximity, connecting conceptually related entities, and assigning confidence to potential connections. Document both deterministic and probabilistic linking approaches.

Implement ongoing identity governance with a central repository of resolved entities, roles responsible for identity quality, process for handling contradictory identity claims, tracking changes to entity resolution, process for managing resolution failures, and mechanisms for users to report identity issues. This governance ensures consistent entity resolution over time.

Knowledge Extraction from Unstructured Data

Develop capabilities for extracting knowledge from text by implementing document preprocessing to standardize formats and encoding, text segmentation to break documents into manageable units, named entity recognition to identify key elements (people, organizations, concepts), relationship extraction to determine connections between entities, concept mapping to align extracted terms with ontologies, and sentiment and intent analysis to capture subjective aspects. Apply these capabilities to clinical notes, correspondence, call transcripts, and other text sources.

Create processes for extracting knowledge from semi-structured sources by parsing standardized forms with known layouts, extracting data from repeating sections in documents, identifying tabular information in various formats, extracting relationships implied by document structure, capturing implicit hierarchical information, and identifying document sequence and workflows. Apply these techniques to scanned forms, provider directories, benefit summaries, and other semi-structured content.

Implement processes for human-in-the-loop verification by establishing verification workflows for extracted knowledge, prioritizing validation based on confidence scores and impact, designing intuitive interfaces for subject matter expert review, capturing reasoning behind human decisions for learning, providing feedback to extraction algorithms for improvement, and developing metrics to track verification efficiency and impact. This human-machine partnership ensures quality while continuously improving automation.
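Prioritizing validation by confidence and impact can be as simple as ranking items by expected cost of error. The following sketch assumes each extracted fact carries a confidence between 0 and 1 and a business impact weight; both fields and the auto-accept threshold are hypothetical.

```python
def review_priority(item):
    """Higher when the extraction is uncertain and the fact matters more."""
    return (1.0 - item["confidence"]) * item["impact"]


def build_review_queue(extractions, auto_accept=0.95):
    """Skip high-confidence items and order the rest for expert review."""
    pending = [e for e in extractions if e["confidence"] < auto_accept]
    return sorted(pending, key=review_priority, reverse=True)
```

For example, a moderately uncertain fact that affects a coverage decision would be reviewed before a very uncertain fact with little downstream effect.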

Knowledge Graph Enrichment and Refinement

Develop processes for inferring new relationships from existing data by applying transitive reasoning to extend explicit relationships, analyzing co-occurrence patterns to identify potential connections, applying similarity measures to group related entities, using temporal analysis to infer sequencing and causality, implementing graph algorithms (clustering, centrality, path finding) to discover patterns, and incorporating domain-specific inference rules based on industry knowledge. These techniques expand the graph beyond explicitly stated connections.
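Transitive reasoning is the most mechanical of these techniques. As a minimal sketch, assume a single relationship type such as a hypothetical PART_OF, stored as (source, target) pairs; the function returns only the pairs that are implied but not explicitly stated.

```python
from collections import defaultdict


def infer_transitive(edges):
    """Return (source, target) pairs implied by transitivity but not stated."""
    explicit = set(edges)
    successors = defaultdict(set)
    for src, dst in explicit:
        successors[src].add(dst)

    inferred = set()
    for start in list(successors):
        # Depth-first walk from each node; 'seen' also guards against cycles.
        seen, stack = set(), list(successors[start])
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(successors.get(node, ()))
        for node in seen:
            if node != start and (start, node) not in explicit:
                inferred.add((start, node))
    return inferred
```

Inferred edges are usually best stored with a marker distinguishing them from asserted ones, so that they can be recomputed or retracted when the underlying facts change.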

Create mechanisms for enhancing the graph with external knowledge by integrating public healthcare taxonomies and ontologies, incorporating geographic and demographic reference data, adding regulatory and compliance knowledge bases, linking to public provider information, enriching with drug interaction and contraindication data, and connecting to social determinants of health datasets. External knowledge provides broader context and fills gaps in internal data.

Implement processes for continuous knowledge graph refinement by analyzing query patterns to identify high-value areas for enhancement, capturing user feedback on graph completeness and accuracy, monitoring data quality metrics to identify improvement opportunities, performing regular ontology alignment to identify semantic gaps, conducting formal knowledge validation with domain experts, and implementing graph testing and validation frameworks. This continuous improvement ensures the knowledge graph evolves to meet changing needs.

Knowledge Quality Management

Establish processes for defining and measuring knowledge quality by developing comprehensive knowledge quality dimensions (accuracy, completeness, consistency, timeliness, relevance), creating measurable metrics for each quality dimension, establishing baselines and targets for quality levels, implementing automated quality monitoring, defining quality standards for different knowledge domains, and creating quality dashboards for tracking and reporting. These measures ensure knowledge quality is objectively managed.

Implement processes for detecting and addressing quality issues by applying graph consistency checks to identify logical contradictions, implementing outlier detection to find anomalous patterns, analyzing temporal consistency to identify impossible sequences, validating against business rules and domain constraints, comparing with authoritative sources to detect discrepancies, and monitoring relationship density to identify possible missing connections. These detection mechanisms help maintain graph integrity.
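Temporal consistency checks lend themselves to simple, explicit rules. The sketch below assumes a claim with admit and discharge dates and a coverage period with start and optional end dates; the field names and the three rules are illustrative only.

```python
from datetime import date


def temporal_violations(claim, coverage):
    """Return the names of temporal rules that a claim breaks."""
    issues = []
    if claim["discharge"] < claim["admit"]:
        issues.append("discharge_before_admission")
    if claim["admit"] < coverage["start"]:
        issues.append("service_before_coverage_start")
    # An open-ended coverage period is represented by end = None.
    if coverage["end"] is not None and claim["admit"] > coverage["end"]:
        issues.append("service_after_coverage_end")
    return issues
```

Returning rule names rather than a single pass/fail flag lets the curation workflow route each kind of violation to the team that owns the underlying data.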

Create systematic approaches to knowledge curation and correction by establishing workflows for addressing identified quality issues, implementing versioning to track knowledge corrections, capturing provenance for quality improvements, providing tools for expert curation of graph content, prioritizing corrections based on business impact, and measuring and reporting on quality improvement activities. These curation processes ensure the graph remains a trusted source of organizational knowledge.

LLM Integration with Knowledge Graphs

To maximize value, healthcare insurers must create effective integrations between their knowledge graphs and LLMs. This integration creates a bidirectional flow where knowledge graphs ground LLMs in organizational reality, and LLMs help make knowledge graphs more accessible and comprehensive.

LLM Grounding and Retrieval-Augmented Generation

Develop a comprehensive retrieval strategy for LLM grounding that determines when to retrieve knowledge graph information, what types of information to prioritize, how much context to include with each retrieval, how to handle ambiguous queries that could match multiple graph elements, when to time retrievals during conversation flows, and how to balance performance with relevance in retrieval operations. This strategy ensures LLMs have appropriate context for healthcare insurance interactions.

Implement knowledge graph query mechanisms for LLM context by developing natural language to graph query translation, implementing entity recognition and disambiguation in user queries, creating optimized graph traversal patterns for common question types, designing relevance ranking for graph query results, implementing response size management for retrieval efficiency, and establishing caching mechanisms for frequently accessed knowledge. These mechanisms connect user intentions to relevant graph knowledge.

Create processes for integrating retrieved knowledge into LLM prompts by designing prompt templates that effectively incorporate graph knowledge, implementing dynamic prompt construction based on retrieval results, determining proper attribution and citation of graph information, developing fallback strategies when relevant knowledge isn't found, managing context window limitations with prioritization rules, and implementing mechanisms to resolve contradictions between different knowledge sources. These integration processes ensure LLMs leverage organizational knowledge effectively.
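Dynamic prompt construction, citation, fallback, and context budgeting can be combined in one small function. This is a sketch under stated assumptions: retrieved facts are dictionaries with hypothetical id, text, and score fields, the budget is counted in characters rather than tokens for simplicity, and the prompt wording is a placeholder.

```python
def build_prompt(question, facts, max_chars=500):
    """Assemble a grounded prompt from ranked knowledge graph facts."""
    if not facts:
        # Fallback: tell the model explicitly that nothing was retrieved.
        return (
            f"Question: {question}\n"
            "No matching knowledge graph facts were found. "
            "Say that the answer is unknown rather than guessing."
        )

    lines, used = [], 0
    for fact in sorted(facts, key=lambda f: f["score"], reverse=True):
        line = f"[{fact['id']}] {fact['text']}"
        # Skip facts that would exceed the budget; smaller ones may still fit.
        if used + len(line) > max_chars:
            continue
        lines.append(line)
        used += len(line)

    context = "\n".join(lines)
    return (
        "Answer using only the facts below and cite fact ids in brackets.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )
```

Carrying the fact identifier into the prompt is what makes attribution possible afterwards: any bracketed id in the answer can be traced back to a specific graph element.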

Knowledge Graph Navigation and Explanation

Develop capabilities for LLM-powered graph exploration by creating natural language interfaces for graph navigation, implementing conversational memory for exploration sessions, generating contextual suggestions for exploration paths, developing visualization descriptions for complex relationships, creating explanations of inference paths and reasoning chains, and implementing interactive filtering and focusing mechanisms. These capabilities make complex knowledge accessible through natural conversation.

Create systems for LLM-generated explanations of graph patterns by implementing narrative generation for graph analysis results, developing comparison explanations between similar entities or patterns, creating counterfactual explanations for "what if" scenarios, generating explanations at different levels of detail for different audiences, implementing causal narrative construction from temporal patterns, and developing explanation templates for common graph structures. These explanations translate complex graph insights into actionable narratives.

Establish methods for interactive knowledge refinement through dialogue by creating conversational interfaces for knowledge validation, implementing question generation to fill identified knowledge gaps, developing dialogue flows for entity resolution confirmation, creating interactive disambiguation mechanisms for ambiguous concepts, implementing feedback collection during knowledge interactions, and developing explanation and correction workflows for identified inconsistencies. These interactive capabilities improve knowledge quality through natural interaction.

Knowledge Graph Construction and Enhancement with LLMs

Develop processes for LLM-assisted knowledge extraction by implementing text analysis for entity and relationship extraction, creating mechanisms for processing unstructured documents and notes, developing specialized extraction for healthcare-specific documents, implementing validation workflows for extracted knowledge, creating feedback mechanisms to improve extraction quality, and establishing performance metrics for knowledge extraction processes. These capabilities transform unstructured content into structured knowledge.

Create systems for LLM-based knowledge validation and enrichment by implementing consistency checking between text and graph representation, developing plausibility assessment for new knowledge assertions, creating intelligent gap detection in knowledge representation, implementing relationship suggestion based on similar patterns, developing zero-shot and few-shot learning for new relationship types, and establishing confidence scoring for LLM-derived knowledge. These systems help maintain knowledge quality while expanding coverage.

Establish processes for domain knowledge distillation into graphs by implementing workflows for expert knowledge elicitation through conversation, creating knowledge formalization from natural language descriptions, developing methods for capturing procedural knowledge and decision logic, implementing contradiction detection between sources, creating versioning for evolving knowledge, and establishing traceability between source materials and formalized knowledge. These processes transform tacit expertise into explicit, structured knowledge.

Hybrid Reasoning Systems

Develop architectures for combined symbolic and neural reasoning by creating interfaces between graph reasoning and LLM inference, implementing explanation generation for hybrid reasoning processes, developing uncertainty management across reasoning types, creating reasoning workflow orchestration, implementing fallback mechanisms when one approach fails, and establishing performance metrics for hybrid reasoning. These architectures leverage the strengths of both structured knowledge and neural language understanding.
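The fallback and uncertainty aspects of such an architecture can be illustrated with a simple orchestration function. In this sketch, graph_rules is a list of hypothetical callables that return an answer or None, and llm is a hypothetical callable returning a (text, confidence) pair; neither corresponds to a specific product API.

```python
def hybrid_answer(question, graph_rules, llm, min_llm_confidence=0.7):
    """Prefer deterministic graph reasoning; fall back to the LLM otherwise."""
    for rule in graph_rules:
        result = rule(question)
        if result is not None:
            # Symbolic answers are traceable, so no extra review is flagged.
            return {"answer": result, "source": "graph", "needs_review": False}

    text, confidence = llm(question)
    return {
        "answer": text,
        "source": "llm",
        # Low-confidence neural answers are routed to a human.
        "needs_review": confidence < min_llm_confidence,
    }
```

Recording the source of each answer supports both explanation generation and the performance metrics mentioned above, since graph and LLM paths can be evaluated separately.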

Create specialized healthcare reasoning capabilities by implementing medical necessity determination using hybrid approaches, developing benefit interpretation combining policy rules and contextual understanding, creating provider matching based on complex clinical and network criteria, implementing risk stratification combining multiple knowledge sources, developing care gap identification through pattern recognition, and creating fraud detection combining rules and anomaly detection. These domain-specific capabilities address critical healthcare insurance functions.

Establish processes for continuous learning and improvement by implementing feedback loops from user interactions to knowledge enhancement, creating performance tracking across reasoning approaches, developing automated testing against known scenarios, implementing monitoring for reasoning drift or degradation, creating periodic revalidation of critical reasoning paths, and establishing governance for hybrid reasoning evolution. These processes ensure the system becomes more capable and reliable over time.

Technical Architecture for Graph-Enhanced LLMs

Healthcare insurers need a robust technical architecture that supports the integration of knowledge graphs and LLMs at enterprise scale. This architecture must balance performance, security, compliance, and flexibility.

Architectural Pattern Selection

Develop a comprehensive integration architecture by evaluating retrieval-augmented generation approaches for LLM enhancement, considering agent-based architectures for complex workflows, implementing tool-using LLM frameworks for system access, developing query decomposition for complex information needs, creating multi-step reasoning pipelines, implementing LLM orchestration for coordinating multiple models, weighing specialized against general-purpose LLMs for each use case, and creating hybrid architectures combining multiple patterns. These architectural decisions shape the overall system capabilities.

Create data flow designs for knowledge-enhanced LLM operations by mapping query/request flows from users to systems, designing retrieval flows from knowledge graph to LLM context, implementing result processing flows for LLM outputs, creating feedback capture for continuous improvement, developing real-time vs. batch processing approaches, implementing caching strategies for performance optimization, and creating fallback paths for system components. These flows ensure efficient system operation.

Establish platform layer selections by evaluating vector database options for semantic retrieval, considering graph database technologies for knowledge representation, implementing document storage for unstructured content, creating model serving infrastructure for LLMs, developing API management for service interfaces, implementing workflow engines for complex processes, and creating observability platforms for system monitoring. These technology selections form the foundation of the solution architecture.

Performance and Scalability Design

Develop a comprehensive performance engineering approach by implementing performance testing frameworks for LLM-graph interactions, creating baseline metrics for critical operations, establishing performance budgets for key user journeys, developing scaling strategies for different components, implementing load balancing and distribution approaches, creating caching hierarchies for frequently accessed information, and developing query optimization techniques. These approaches ensure the system remains responsive under varying loads.

Create resource optimization strategies by implementing right-sizing for different LLM use cases, developing cost-effective retrieval strategies, creating multi-tier storage for knowledge graph data, implementing compute resource management, developing batch processing for non-real-time needs, creating model quantization and optimization approaches, and implementing cloud resource management. These strategies balance performance and cost.

Establish reliability and availability designs by implementing circuit breakers for failure isolation, creating graceful degradation modes, developing automated recovery mechanisms, implementing redundancy for critical components, creating comprehensive monitoring and alerting, developing synthetic transaction monitoring, and implementing disaster recovery processes. These designs ensure business continuity even during system disruptions.
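A circuit breaker with graceful degradation can be sketched as follows. This is a minimal, single-threaded illustration: the failure threshold, reset interval, and injected clock parameter are assumptions chosen for readability, and a production system would more likely use an established resilience library.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency for a while and serve a fallback."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so the breaker can be tested
        self.failures = 0
        self.opened_at = None

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: do not touch the dependency
            # Half-open: allow one trial call; one more failure reopens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

In this setting the protected call might be a knowledge graph query, and the fallback a degraded mode such as answering from cached context or declining to answer.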

Security and Compliance Architecture

Develop comprehensive security controls by implementing identity and access management for graph and LLM systems, creating role-based access at both data and function levels, developing data classification and handling policies, implementing encryption for data at rest and in transit, creating audit logging and monitoring for critical operations, implementing anomaly detection for unusual access patterns, and developing secure API design patterns. These controls protect sensitive healthcare information.

Create privacy-enhancing designs by implementing data minimization in LLM interactions, developing privacy-preserving query techniques, creating de-identification for training and tuning data, implementing purpose limitation controls, developing consent management integration, creating privacy impact assessment frameworks, and implementing purpose-specific LLMs with appropriate data exposure. These designs protect member privacy while enabling valuable functionality.
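Data minimization and purpose limitation can be enforced at the point where context is assembled for an LLM. The sketch below uses a hypothetical per-purpose allow-list and a single pattern for Social Security numbers. It illustrates the control only and is not a complete de-identification method.

```python
import re

# Hypothetical allow-list: which fields each purpose may expose to an LLM.
ALLOWED_FIELDS = {
    "benefit_question": {"plan_id", "service_code", "age_band"},
    "claim_status": {"claim_id", "status", "service_date"},
}

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def minimize(record, purpose):
    """Keep only fields approved for this purpose; unknown purposes get none."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


def scrub_text(text):
    """Mask SSN-shaped strings in free text before it reaches a prompt."""
    return SSN_PATTERN.sub("[REDACTED-SSN]", text)
```

Defaulting to an empty set for unrecognized purposes means a new use case exposes nothing until someone explicitly approves its field list.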

Establish healthcare-specific compliance frameworks by implementing HIPAA-aligned controls for PHI handling, creating HITRUST compliance mapping, developing audit mechanisms for regulatory requirements, implementing evidence collection for compliance demonstration, creating compliance monitoring dashboards, developing regulatory change management processes, and implementing model governance frameworks. These frameworks ensure the organization meets its compliance obligations.

Implementation Framework and Tools

Select a comprehensive technology stack by evaluating graph database technologies, considering vector database options for semantic search, implementing document processing and management tools, creating LLM integration frameworks, developing API management platforms, implementing workflow orchestration tools, creating observability and monitoring solutions, and developing DevOps and deployment tooling. These selections create a cohesive technology ecosystem.

Create development and deployment methodologies by implementing MLOps for LLM management, developing knowledge graph versioning approaches, creating continuous integration for graph data and schema, implementing testing frameworks for hybrid systems, developing canary deployment approaches for risk mitigation, creating rollback mechanisms for problematic deployments, and implementing environment consistency across development stages. These methodologies ensure reliable delivery of complex systems.

Establish API and integration standards by developing consistent API design patterns, creating standardized error handling and response formats, implementing rate limiting and throttling policies, developing authentication and authorization standards, creating comprehensive API documentation, implementing API versioning approaches, and developing integration patterns for external systems. These standards facilitate system interoperability and developer productivity.

User Experience and Interface Design

Create natural language interaction designs by developing conversation design frameworks for healthcare contexts, implementing entity disambiguation in conversational flows, creating response generation standards for consistency, developing context management for multi-turn interactions, implementing persona and tone guidelines, creating multi-modal interaction patterns, and developing accessibility standards for diverse users. These designs ensure effective human-system communication.

Develop specialized interfaces for different user types by creating clinician-focused interface patterns, implementing administrator and analyst workbenches, developing member-facing simplified interactions, creating underwriter and claims processor interfaces, implementing care manager workspaces, developing compliance officer dashboards, and creating executive insights interfaces. These specialized interfaces address the unique needs of different stakeholders.

Establish knowledge visualization approaches by implementing relationship visualization patterns, creating interactive exploration interfaces, developing path and provenance visualization, implementing temporal pattern displays, creating comparative visualization techniques, developing anomaly highlighting mechanisms, and implementing detail-on-demand patterns for complex information. These approaches make complex knowledge understandable and actionable.

Governance and Compliance for Knowledge Graphs

Effective governance is essential for ensuring that knowledge graphs and LLMs deliver sustainable value while managing risks appropriately. Healthcare insurers must establish comprehensive governance frameworks tailored to their regulatory environment.

Data and Knowledge Governance

Develop a comprehensive governance framework by establishing a data and knowledge governance council, creating role definitions and responsibilities, implementing decision rights and accountability models, developing policy frameworks for knowledge assets, creating standards for knowledge quality and consistency, implementing metadata governance approaches, and developing measurement frameworks for governance effectiveness. These structures ensure appropriate oversight of knowledge assets.

Create knowledge quality management processes by implementing quality metrics and standards, developing data profiling and quality assessment, creating remediation workflows for quality issues, implementing subject matter expert review processes, developing automated quality monitoring, creating quality improvement initiatives, and implementing quality reporting and dashboards. These processes maintain the integrity of the knowledge graph.

Establish lifecycle management approaches by developing processes for knowledge onboarding and validation, creating deprecation and retirement procedures, implementing versioning and change control, developing impact assessment for proposed changes, creating traceability from sources to knowledge, implementing knowledge update propagation, and developing archiving and retention policies. These approaches ensure knowledge remains current and reliable.

Ethics and Responsible AI

Develop AI ethics guidelines specific to healthcare insurance by establishing principles for fair and unbiased systems, creating frameworks for transparency and explainability, implementing testing procedures for bias detection, developing processes for addressing identified biases, creating documentation standards for algorithms and models, implementing ethical review processes for high-risk applications, and developing ongoing monitoring for ethical concerns. These guidelines ensure technology serves all members equitably.

Create responsible AI development processes by implementing diverse representation in development teams, creating inclusive design methodologies, developing testing approaches with diverse populations, implementing fairness metrics and monitoring, creating ethics review boards for complex cases, developing root cause analysis for adverse events, and implementing continuous improvement processes for ethical considerations. These processes embed ethical considerations throughout development.

Establish oversight mechanisms for LLM and knowledge graph applications by developing risk assessment frameworks for AI applications, creating tiered governance based on risk levels, implementing audit mechanisms for high-risk functions, developing explainability requirements based on use case, creating ethical incident response procedures, implementing user feedback collection for system behavior, and developing transparency reporting appropriate to different stakeholders. These mechanisms ensure appropriate human oversight of automated systems.

Regulatory Compliance and Risk Management

Develop healthcare-specific compliance frameworks by mapping regulatory requirements to system features, creating documentation trails for compliance demonstration, implementing privacy impact assessments for new capabilities, developing compliance testing protocols, creating regulatory change monitoring processes, implementing evidence collection automation, and developing compliance reporting dashboards. These frameworks ensure adherence to healthcare's complex regulatory environment.

Create comprehensive risk management approaches by implementing risk identification frameworks for knowledge and AI, developing risk assessment methodologies, creating risk mitigation strategies and controls, implementing ongoing risk monitoring, developing incident management and response, creating risk acceptance and escalation processes, and implementing risk reporting for leadership visibility. These approaches ensure risks are identified and managed appropriately.

Establish model governance specific to healthcare applications by developing model inventory and cataloging, creating model documentation standards, implementing model validation procedures, developing model performance monitoring, creating model update and retraining processes, implementing model audit trails and lineage tracking, and developing model retirement procedures. These governance processes ensure appropriate oversight of LLM capabilities.

Security and Privacy Management

Develop knowledge graph security controls by implementing access control frameworks for graph data, creating role-based security models, developing attribute-based access for fine-grained control, implementing query-level security enforcement, creating security monitoring for graph access, developing security incident response processes, and implementing security testing and validation. These controls protect sensitive information within the knowledge graph.
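Attribute-based access combined with query-level enforcement can be illustrated by filtering query results against the requesting user's attributes. The node fields (sensitivity, line_of_business) and user fields (entitlements, lines_of_business) below are hypothetical; many graph databases offer native mechanisms that would be preferable where available.

```python
def can_read(user, node):
    """Decide access from attributes of both the user and the node."""
    if node["sensitivity"] == "phi" and "phi_access" not in user["entitlements"]:
        return False
    lob = node.get("line_of_business")
    if lob is not None and lob not in user["lines_of_business"]:
        return False
    return True


def secure_results(user, nodes):
    """Apply the access decision to every node a query returns."""
    return [node for node in nodes if can_read(user, node)]
```

Applying the filter to results rather than trusting each caller to write a safe query keeps enforcement in one place, which also simplifies security monitoring.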

Create LLM security and privacy frameworks by implementing prompt injection prevention, developing data leakage prevention, creating authentication and authorization for LLM access, implementing content filtering and safety measures, developing privacy-preserving interaction patterns, creating audit trails for LLM interactions, and implementing security testing specific to LLM vulnerabilities. These frameworks address the unique security challenges of LLMs.

Establish healthcare-specific privacy controls by implementing member consent management, creating data minimization approaches, developing de-identification techniques for different contexts, implementing purpose limitation controls, creating privacy-preserving analytics approaches, developing privacy impact assessment methodologies, and implementing privacy monitoring and reporting. These controls protect member information while enabling valuable insights.

High-Impact Use Cases for Graph-Enhanced LLMs

Healthcare insurers should prioritize use cases that deliver significant value by leveraging the unique capabilities of knowledge graphs and LLMs together. These high-impact applications address core business challenges while building momentum for broader transformation.

Clinical and Care Management Enhancement

Develop personalized care management capabilities by implementing intelligent member risk stratification, creating personalized intervention recommendations, developing care gap identification and prioritization, implementing treatment appropriateness evaluation, creating social determinants of health integration, developing longitudinal health journey mapping, and implementing outcome prediction and tracking. These capabilities improve member health while optimizing resource allocation.

Create intelligent utilization management approaches by implementing context-aware medical necessity determination, developing evidence-based authorization recommendations, creating expedited review routing for urgent cases, implementing automatic approval for clear-cut cases, developing explanation generation for review decisions, creating outlier detection for unusual patterns, and implementing authorization optimization based on outcomes. These approaches balance appropriate care with cost management.

Establish advanced provider network optimization by developing patient-provider matching based on clinical needs, creating provider performance measurement incorporating multiple dimensions, implementing network adequacy monitoring and forecasting, developing targeted provider recruitment recommendations, creating provider relationship pathway analysis, implementing referral pattern optimization, and developing centers of excellence identification. These capabilities ensure members access the right care at the right place.

Claims and Payment Optimization

Develop intelligent claims processing enhancements by implementing semantic understanding of claim narratives, creating contextual code validation and correction, developing sophisticated bundling and unbundling detection, implementing clinical validation of claim-diagnosis relationships, creating multi-claim episode construction, developing provider pattern analysis and feedback, and implementing payment accuracy prediction. These capabilities improve accuracy while reducing manual effort.

Create advanced payment integrity approaches by implementing sophisticated fraud pattern detection, developing upcoding and code manipulation identification, creating cross-provider scheme detection, implementing inappropriate care pattern recognition, developing historical relationship analysis for suspicious patterns, creating explanation generation for suspected issues, and implementing prioritization of high-value investigative targets. These approaches reduce inappropriate payments while focusing investigative resources.

Establish value-based care analytics and optimization by developing comprehensive provider performance analysis, creating member attribution refinement, implementing risk adjustment accuracy improvement, developing quality measure optimization, creating care pattern variation analysis, implementing financial performance forecasting, and developing intervention ROI analysis. These capabilities support the transition to value-based payment models.

Member Experience and Engagement

Develop intelligent benefit navigation by implementing personalized benefit explanation and guidance, creating treatment option comparison with coverage information, developing out-of-pocket estimation for treatment paths, implementing in-network alternative suggestions, creating benefit utilization optimization, developing coverage verification for specific services, and implementing plan selection guidance based on individual needs. These capabilities help members maximize their benefits.

Create personalized health journey support by implementing longitudinal health management assistance, creating preventive care personalization, developing chronic condition self-management support, implementing care transition guidance, creating medication management assistance, developing appointment preparation support, and implementing recovery and rehabilitation guidance. These approaches improve member health engagement and outcomes.

Establish advanced member question answering by developing complex benefit inquiry handling, creating claim status investigation and explanation, implementing coverage determination for complex scenarios, developing specialized explanation generation for denials and policies, creating healthcare concept explanation at appropriate literacy levels, implementing multi-turn clarification for complex questions, and developing personalized recommendation generation. These capabilities improve member understanding while reducing service costs.

Operational Excellence and Decision Support

Develop intelligent document processing by implementing automated intake and classification, creating information extraction and structured data conversion, developing inconsistency identification across documents, implementing summarization for complex documentation, creating relationship extraction from unstructured content, developing knowledge base enhancement from documents, and implementing document-based decision support. These capabilities transform unstructured information into actionable knowledge.

Create enhanced business intelligence and analytics by implementing natural language querying of complex data, creating automated insight generation and prioritization, developing anomaly detection and root cause analysis, implementing cross-domain pattern discovery, creating dynamic forecasting with scenario analysis, developing narrative and visualization generation, and implementing recommendation generation for performance improvement. These approaches make analytics accessible to broader audiences.

Establish knowledge worker augmentation by developing research assistance and information gathering, creating policy interpretation and application guidance, implementing decision support with explanation generation, developing complex case analysis assistance, creating knowledge gap identification and filling, implementing process compliance verification, and developing specialized training and education adaptation. These capabilities enhance productivity while ensuring consistency.

Strategic and Market Intelligence

Develop enhanced market analysis capabilities by implementing competitive product comparison and analysis, creating market opportunity identification, developing member segment discovery and analysis, implementing provider network comparative analysis, creating regional market performance assessment, developing trend identification and forecasting, and implementing what-if scenario modeling for market changes. These capabilities inform strategic decision-making.

Create product development and optimization approaches by implementing benefit design optimization based on utilization patterns, creating premium and value alignment analysis, developing target population identification for new products, implementing cross-selling opportunity identification, creating product performance prediction, developing competitive positioning analysis, and implementing regulatory impact assessment for product changes. These approaches improve product market fit and performance.

Establish regulatory intelligence and compliance optimization by developing regulatory change impact analysis, creating compliance monitoring and gap identification, implementing regulatory submission assistance and verification, developing audit preparation and support, creating compliance risk assessment and prioritization, implementing cross-jurisdiction requirement harmonization, and developing regulatory landscape monitoring and alerting. These capabilities support compliance while reducing the overhead of maintaining it.

Organizational Alignment and Skills Development

Successful implementation of knowledge graphs and LLMs requires alignment across the organization and development of new capabilities. Healthcare insurers must invest in people alongside technology.

Leadership Alignment and Vision

Develop a compelling transformation vision by creating a clear narrative for knowledge-centric transformation, developing concrete examples of expected business outcomes, implementing value realization frameworks, creating phased transformation roadmaps, developing executive alignment sessions, implementing showcase demonstrations of capabilities, and creating communication strategies for different stakeholders. This vision aligns leadership around common goals.

Create governance structures for knowledge initiatives by establishing a knowledge strategy council, implementing cross-functional steering committees, developing clear decision rights and accountability models, creating investment prioritization frameworks, implementing value tracking mechanisms, developing risk management approaches, and creating cross-organizational collaboration models. These structures ensure coordinated action across the enterprise.

Establish business alignment approaches by developing business capability alignment with knowledge initiatives, creating business outcome responsibility assignment, implementing value stream mapping for knowledge impact, developing business process redesign approaches, creating change readiness assessment frameworks, implementing business partner engagement models, and developing business case templates for knowledge initiatives. These approaches ensure initiatives deliver tangible business value.

Capability Building and Workforce Development

Develop comprehensive skill development strategies by implementing skills assessment for knowledge-related capabilities, creating role-based learning journeys, developing formal training programs for key technologies, implementing hands-on workshops and immersive learning, creating certification programs for critical skills, developing job rotation and experience-based learning, and implementing continuous learning infrastructure. These strategies build internal capabilities systematically.

Create specialized teams and roles by developing knowledge engineering team structures, creating ontology development competency centers, implementing data science and AI centers of excellence, developing LLM prompt engineering specialization, creating knowledge graph developer career paths, implementing domain expert-technologist paired roles, and developing hybrid business-technical positions. These specialized functions provide critical expertise.

Establish knowledge sharing and collaboration approaches by implementing communities of practice for knowledge domains, creating knowledge-sharing platforms and events, developing documentation and knowledge base creation, implementing peer learning and mentoring programs, creating collaboration frameworks across functions, developing innovation challenges and hackathons, and implementing success story sharing and recognition. These approaches accelerate learning across the organization.

Change Management and Adoption

Develop a comprehensive change management approach by implementing stakeholder analysis and engagement planning, creating impact assessment for affected roles, developing communication strategies for different audiences, implementing training needs analysis and planning, creating adoption measurement frameworks, developing change agent networks, and implementing resistance management approaches. This systematic approach accelerates adoption.

Create workflow integration strategies by developing journey mapping for knowledge-enhanced processes, creating transition plans from current to future state, implementing pilot initiatives with high visibility, developing quick wins to build momentum, creating workflow and process documentation, implementing role transition support, and developing performance support tools for new capabilities. These strategies embed new capabilities into daily work.

Establish continuous improvement processes by implementing feedback collection from users and stakeholders, creating enhancement prioritization frameworks, developing user experience refinement processes, implementing performance monitoring and optimization, creating success metrics and dashboards, developing innovation forums for new ideas, and implementing regular retrospectives and learning cycles. These processes ensure sustained value creation over time.

Implementation Roadmap and Change Management

Successful transformation requires a structured implementation approach that delivers incremental value while building toward comprehensive capabilities. Healthcare insurers need a roadmap that balances quick wins with long-term strategic objectives.

Strategic Roadmap Development

Create a multi-year transformation roadmap by establishing clear phases with distinct objectives, developing capability evolution across phases, creating use case sequencing based on value and complexity, implementing technical foundation buildout planning, developing organizational change synchronization, creating risk mitigation planning, and implementing dependency management across initiatives. This roadmap provides a clear path forward.

Develop value-based prioritization frameworks by implementing business impact assessment methodologies, creating complexity and effort estimation approaches, developing organizational readiness assessments, implementing technical dependency analysis, creating risk assessment for initiatives, developing balanced portfolio approaches across domains, and implementing adjustment mechanisms as conditions change. These frameworks ensure resources focus on the highest-value opportunities.
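One way to make such a framework concrete is a weighted scoring model. The sketch below is illustrative only: the weights, the 1-to-5 scales, and the sample initiatives are assumptions rather than recommended values, and a real framework would also account for technical dependencies and portfolio balance.

```python
from dataclasses import dataclass

# Hypothetical weights; a real framework would calibrate these with stakeholders.
WEIGHTS = {"impact": 0.4, "readiness": 0.2, "effort": 0.2, "risk": 0.2}


@dataclass
class Initiative:
    name: str
    impact: int     # 1 (low) to 5 (high) business impact
    effort: int     # 1 (low) to 5 (high) complexity and effort
    readiness: int  # 1 (low) to 5 (high) organizational readiness
    risk: int       # 1 (low) to 5 (high) delivery risk


def priority_score(item: Initiative) -> float:
    """Impact and readiness raise the score; effort and risk lower it."""
    return (
        WEIGHTS["impact"] * item.impact
        + WEIGHTS["readiness"] * item.readiness
        + WEIGHTS["effort"] * (6 - item.effort)  # invert so low effort scores high
        + WEIGHTS["risk"] * (6 - item.risk)      # invert so low risk scores high
    )


def rank(items):
    """Return initiatives ordered from highest to lowest priority score."""
    return sorted(items, key=priority_score, reverse=True)


# Illustrative portfolio; the ratings are made up for the example.
portfolio = [
    Initiative("Market what-if modeling", impact=3, effort=5, readiness=2, risk=4),
    Initiative("Member benefit Q&A", impact=5, effort=3, readiness=4, risk=2),
    Initiative("Regulatory change monitoring", impact=4, effort=4, readiness=3, risk=3),
]
ranked = rank(portfolio)
```

Keeping the weights in one place supports the adjustment mechanisms mentioned above: when conditions change, the weights can be revised and the portfolio re-ranked without re-rating every initiative.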

Establish progress measurement approaches by developing key performance indicators for initiatives, creating value realization tracking mechanisms, implementing milestone and deliverable tracking, developing capability maturity assessment, creating technical debt monitoring, implementing periodic roadmap reviews and adjustments, and developing executive dashboard reporting. These approaches maintain momentum and accountability.

Foundation Implementation

Develop a technical foundation establishment plan by implementing graph database platform selection and deployment, creating initial ontology development and validation, developing core data source integration, implementing an identity resolution framework, creating API and service layer development, developing security and compliance foundation, and implementing initial developer tooling and support. This foundation enables subsequent capabilities.

Create initial use case implementation by developing proof of concept for selected use cases, creating minimum viable product definitions, implementing pilot deployments with limited scope, developing feedback collection and refinement processes, creating transition planning from pilot to production, implementing value measurement frameworks, and developing scaling approaches for successful pilots. These initial implementations build momentum and credibility.

Establish data and integration foundation by developing master data alignment with the knowledge graph, creating source system integration prioritization, implementing data quality remediation for critical sources, developing incremental graph population approaches, creating metadata and lineage tracking, implementing data governance operationalization, and developing data pipeline monitoring and management. This data foundation ensures reliable information for knowledge applications.
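Incremental graph population can be approached in several ways. A minimal sketch of one option, change detection by content fingerprint, follows. The in-memory dictionaries stand in for a graph store and a fingerprint index, and the record shape (an "id" key plus arbitrary properties) is an assumption made for illustration.

```python
import hashlib
import json


def record_fingerprint(record: dict) -> str:
    """Stable hash of a source record, independent of key order."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def incremental_load(graph: dict, fingerprints: dict, records: list) -> dict:
    """Write only new or changed records into the graph; skip unchanged ones."""
    counts = {"inserted": 0, "updated": 0, "skipped": 0}
    for record in records:
        key = record["id"]
        digest = record_fingerprint(record)
        if key not in fingerprints:
            counts["inserted"] += 1
        elif fingerprints[key] != digest:
            counts["updated"] += 1
        else:
            counts["skipped"] += 1
            continue  # unchanged since the last load, nothing to write
        graph[key] = dict(record)
        fingerprints[key] = digest
    return counts


# Two loads from a hypothetical claims source; only claim-1 changes in between.
graph, fingerprints = {}, {}
first_run = incremental_load(graph, fingerprints, [
    {"id": "claim-1", "status": "open"},
    {"id": "claim-2", "status": "paid"},
])
second_run = incremental_load(graph, fingerprints, [
    {"id": "claim-1", "status": "closed"},
    {"id": "claim-2", "status": "paid"},
])
```

The returned counts double as a simple pipeline monitoring signal: an unexpected spike in updates or a run with zero inserts may indicate a problem upstream.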

Scaling and Expansion

Develop expansion strategies across business domains by implementing capability expansion sequencing, creating cross-functional implementation teams, developing centralized support and enablement, implementing reusable pattern identification and promotion, creating enterprise standards evolution, developing cross-domain integration planning, and implementing knowledge sharing across initiatives. These strategies systematically expand capabilities across the enterprise.

Create advanced capability development approaches by implementing research and innovation processes, creating technology evaluation frameworks, developing proof of concept processes for emerging capabilities, implementing partnerships with technology providers, creating academic collaboration for specialized capabilities, developing talent acquisition for advanced skills, and implementing intellectual property protection strategies. These approaches keep the organization's capabilities current as the underlying technologies evolve.

Establish enterprise adoption acceleration by developing change champion networks, creating success story communication, implementing user feedback collection and incorporation, developing training program scaling, creating incentive alignment for adoption, implementing processes to remove adoption barriers, and developing measurement of adoption progress. These activities drive organization-wide utilization.

Continuous Evolution and Optimization

Develop ongoing optimization processes by implementing performance monitoring and improvement, creating user experience refinement based on feedback, developing cost optimization initiatives, implementing technology refresh planning, creating technical debt management, developing process refinement based on analytics, and implementing continuous testing and validation. These processes ensure systems improve over time.

Create knowledge expansion frameworks by implementing knowledge gap identification processes, creating knowledge acquisition prioritization, developing automated knowledge extraction expansion, implementing external knowledge integration planning, creating subject matter expert knowledge capture, developing knowledge quality improvement initiatives, and implementing knowledge coverage metrics and tracking. These frameworks systematically expand the knowledge base.

Establish innovation management approaches by developing emerging technology monitoring, creating innovation testing frameworks, implementing idea generation and capture processes, developing innovation funding models, creating rapid prototyping capabilities, implementing transition processes from innovation to production, and developing intellectual capital management. These approaches ensure the organization continues to evolve its capabilities.

Performance Measurement Framework

To ensure knowledge graph and LLM investments deliver sustainable value, healthcare insurers must implement comprehensive measurement frameworks that connect technical metrics to business outcomes.

Business Value Measurement

Develop comprehensive financial impact assessment by implementing cost reduction measurement, creating revenue enhancement tracking, developing operational efficiency quantification, implementing quality improvement valuation, creating time-to-value acceleration measurement, developing risk reduction quantification, and implementing resource optimization tracking. These assessments demonstrate tangible returns on investment.

Create member impact measurement approaches by implementing experience improvement metrics, creating health outcome tracking methodologies, developing engagement enhancement measurement, implementing satisfaction and loyalty metrics, creating access improvement quantification, developing personalization effectiveness measurement, and implementing member effort reduction tracking. These approaches ensure member benefits are captured.

Establish operational excellence measurement by developing process efficiency metrics, creating decision quality improvement tracking, implementing cycle time reduction measurement, developing exception handling reduction quantification, creating straight-through processing rate tracking, implementing resource utilization measurement, and developing compliance accuracy enhancement tracking. These metrics capture operational benefits.
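Two of these metrics, straight-through processing rate and cycle time, are simple to compute once claim events are captured consistently. The sketch below assumes a hypothetical record layout (received date, resolved date, and a count of manual touches) and uses made-up sample data.

```python
from datetime import date
from statistics import median

# Illustrative claim records; field names and values are assumptions.
claims = [
    {"received": "2024-01-02", "resolved": "2024-01-03", "manual_touches": 0},
    {"received": "2024-01-02", "resolved": "2024-01-04", "manual_touches": 0},
    {"received": "2024-01-05", "resolved": "2024-01-15", "manual_touches": 2},
    {"received": "2024-01-08", "resolved": "2024-01-13", "manual_touches": 1},
]


def stp_rate(records) -> float:
    """Share of claims resolved with no manual intervention."""
    untouched = sum(1 for r in records if r["manual_touches"] == 0)
    return untouched / len(records)


def median_cycle_days(records) -> float:
    """Median number of days between receipt and resolution."""
    durations = [
        (date.fromisoformat(r["resolved"]) - date.fromisoformat(r["received"])).days
        for r in records
    ]
    return median(durations)


rate = stp_rate(claims)
cycle = median_cycle_days(claims)
```

Tracking both figures before and after a knowledge graph or LLM capability goes live gives a baseline against which improvement can be measured. The median is used here instead of the mean so that a few long-running exceptions do not dominate the result.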

Technical Performance and Quality

Develop comprehensive technical performance measurement by implementing response time and latency tracking, creating throughput and capacity metrics, developing availability and reliability measurement, implementing resource utilization monitoring, creating scalability performance tracking, developing batch processing efficiency metrics, and implementing load testing to confirm performance at peak volumes. These measurements ensure technical systems meet requirements.
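Latency tracking is usually reported as percentiles rather than averages, because a small number of slow responses can hide behind a healthy mean. A minimal sketch follows, using the nearest-rank percentile method; the sample latencies and the 500 ms target are assumptions chosen for illustration.

```python
import math


def percentile(samples, p: int) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms, target_p95_ms: float) -> dict:
    """Summarize response times and flag whether the p95 target is met."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50_ms": percentile(samples_ms, 50),
        "p95_ms": p95,
        "meets_target": p95 <= target_p95_ms,
    }


# Illustrative query latencies in milliseconds, including one slow outlier.
latencies_ms = [120, 95, 110, 400, 105, 98, 130, 101, 99, 2500]
report = latency_report(latencies_ms, target_p95_ms=500)
```

In this sample the median looks healthy while the 95th percentile exposes the outlier, which is why both figures belong in the report.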

Create knowledge quality assessment frameworks by implementing coverage completeness metrics, creating accuracy and correctness measurement, developing consistency evaluation approaches, implementing freshness and currency tracking, creating relevance assessment methodologies, developing usability measurement for different contexts, and implementing trustworthiness evaluation. These frameworks ensure knowledge assets meet quality standards.
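Coverage completeness and freshness lend themselves to straightforward calculation. The sketch below computes both over a small in-memory list of nodes; the required-property list, the node layout, the identifiers, and the 180-day freshness window are all hypothetical and would come from the ontology and governance policy in practice.

```python
from datetime import date

# Hypothetical required properties per node label.
REQUIRED = {"Provider": ["npi", "specialty", "network_status"]}

# Made-up sample nodes; identifiers are not real.
nodes = [
    {"label": "Provider", "npi": "0000000001", "specialty": "Cardiology",
     "network_status": "in", "last_verified": date(2024, 5, 1)},
    {"label": "Provider", "npi": "0000000002", "specialty": None,
     "network_status": "in", "last_verified": date(2023, 1, 15)},
]


def completeness(items, required) -> float:
    """Share of required properties that are actually populated."""
    expected = filled = 0
    for node in items:
        for prop in required.get(node["label"], []):
            expected += 1
            if node.get(prop) not in (None, ""):
                filled += 1
    return filled / expected if expected else 1.0


def freshness(items, as_of: date, max_age_days: int) -> float:
    """Share of nodes verified within the allowed age window."""
    fresh = sum(
        1 for node in items
        if (as_of - node["last_verified"]).days <= max_age_days
    )
    return fresh / len(items) if items else 1.0


completeness_score = completeness(nodes, REQUIRED)
freshness_score = freshness(nodes, as_of=date(2024, 6, 1), max_age_days=180)
```

Scores like these can be tracked per node type and per source system, so that quality remediation targets the areas where gaps or stale records are concentrated.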